6 Polynomial Interpolation

6.1 Interpolation Conditions

One of the most fundamental techniques in computational science is interpolation: Given a set of points \(x_i\in\mathbb{R}\) and a set of corresponding data \(y_i \in \mathbb{R},\) \(i=0,\ldots, n,\) we look for a function \(f\colon\mathbb{R}\longrightarrow \mathbb{R}\) such that the interpolation conditions \[\tag{6.1} f(x_i) = y_i,\qquad i=0, \ldots, n\] are fulfilled. Obviously, the conditions (6.1) prescribe the values of \(f\) only at the points \(x_i.\)

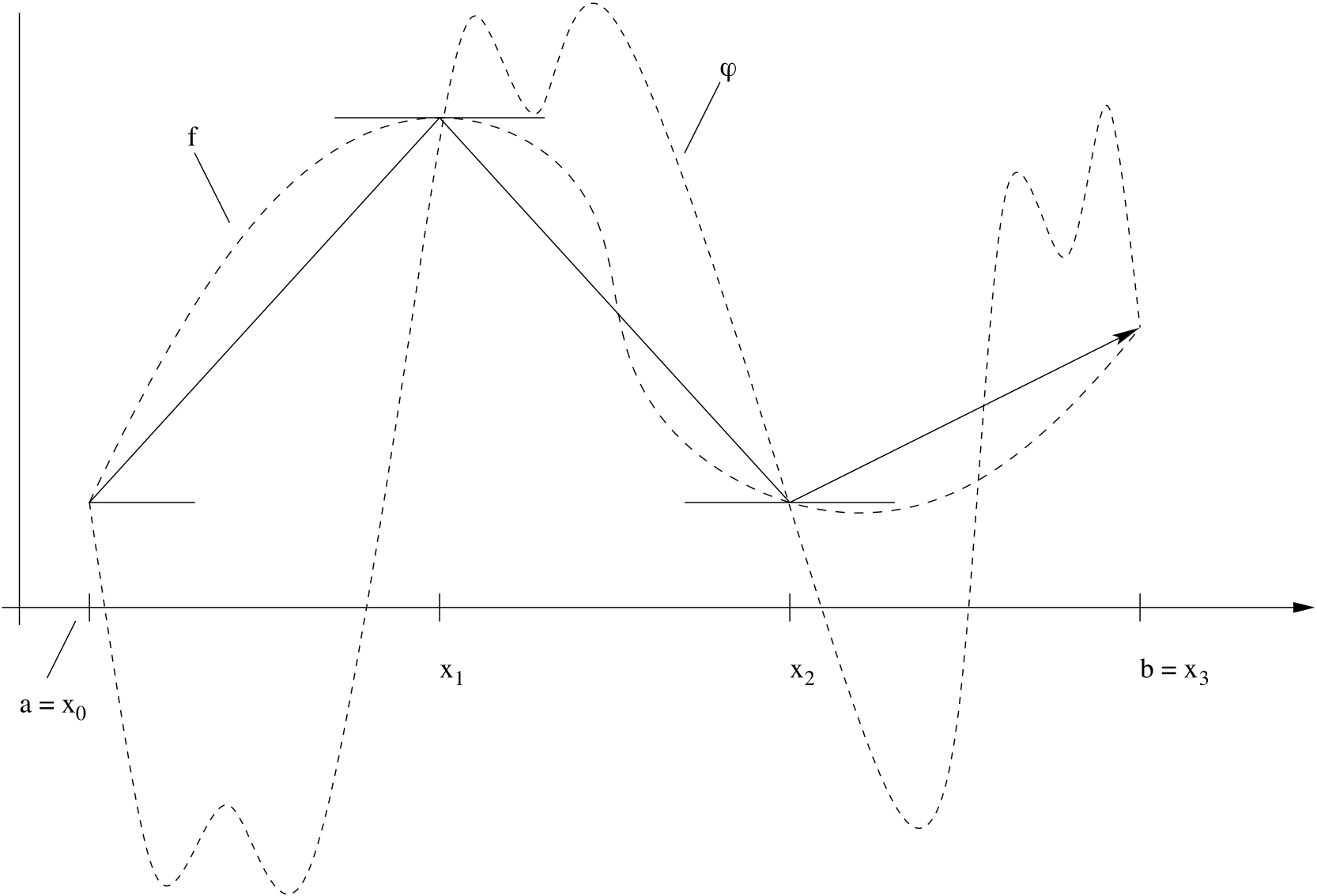

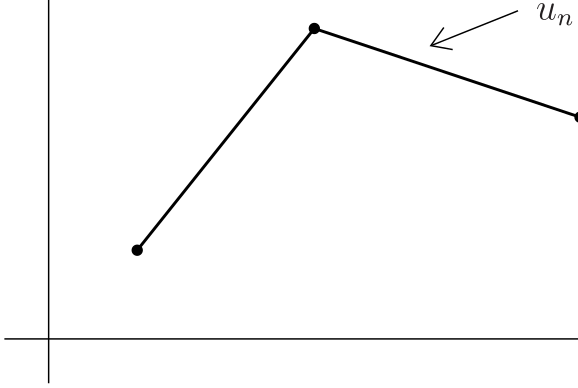

Some examples can be found in Figure 6.1.

From a general point of view, we are interested in a method which

has a small approximation error on our interval of interest \([a,b],\) i.e. we can control and bound the quantity \[\tag{6.2} \begin{aligned} \mathrm{err}_f(\varphi) = \sup_{x\in[a,b]} |f(x) - \varphi(x)| =: \|f-\varphi\|_\infty \end{aligned}\]

is robust in the sense that small changes in the input do lead to small changes in the output only, i.e. a bounded condition number of the mapping \(f\mapsto \varphi,\)

provides a low complexity of the respective algorithms for computing and evaluating \(\varphi.\)

The interpolation can be regarded as a “reconstruction problem”: given the \(n+1\) values \(f(x_0),\ldots,f(x_n)\) of a function \(f\) at the points \(x_0,\ldots, x_n,\) which form our mesh \(\Delta_n,\) \[\tag{6.3} \Delta_n \;=\; \left\{ x_0, \ldots, x_n \;|\; a \leq x_0 < x_1 < \cdots < x_n \leq b\right\} \subset [a,b]\, ,\] we aim to reconstruct \(f\) with the highest possible accuracy. Here, we only will consider continuous functions, i.e. functions which do not “jump” or do not have “holes” in their graph. For convenience, we will denote the set of all continuous and real-valued functions on the interval \([a,b]\) by \(C([a,b])\)

Our goal is to construct a function \(\varphi\in C([a,b]),\) which is intended to approximate \(f\) as well as possible. The minimal requirement we have is that \(\varphi\) fulfills the so-called interpolation conditions \[\tag{6.4} \varphi(x_i) = f(x_i)\, , \qquad i = 0, \ldots, n\, .\]

As can be seen in Figure 6.1, \(\varphi\) can be chosen in many different ways. Widely used methods for constructing approximations \(\varphi\) include

polynomial interpolation

piecewise polynomial interpolation

(B-)splines

trigonometric interpolation

These methods above have some common structure. The function \(\varphi\) should be “easy to handle” so that we can plot it, differentiate it, or integrate it without significant problems.

A typical choice is to use polynomials, i.e. to look for our interpolant \(\varphi\) in the space of all polynomials of degree \(n\): \[\varphi \in P^n([a,b]) =\{p \,|\, p\colon [a,b]\longrightarrow \mathbb{R},\, p(x) = \sum_{i=0}^n a_ix^i\,, \, a_0,\ldots,a_n\in\mathbb{R}\}\, .\] Here, we have decided to use \(P^n([a,b])\) as our “approximation space”. We also note that choices other than polynomials are possible, such as trigonometric functions, exponential functions, rational functions, etc. Here, we focus on polynomials as they are most widely used for building approximation spaces.

We note that from now on we will often write \(P^n\) instead of \(P^n([a,b])\) in order to simplify the notation.

We are especially interested in the question of whether for a given \(f\) there exists a unique \(p_n\in P^n\) which fulfills the interpolation conditions (6.4), \[p_n(x_i) \;=\; f(x_i),\qquad i = 0, \ldots, n\]

As pointed out above, the idea of polynomial interpolation is to construct the sought-after approximation \(\varphi\) as a polynomial of degree less or equal to \(n,\) i.e. we assume that \(\varphi\) has the form \[\tag{6.5} \varphi (x) = a_nx^n+\cdots + a_1 x + a_0 = \sum\limits_{i=0}^{n} a_ix^i\, .\] where \(a_0,\ldots,a_n \in \mathbb{R}\) are the coefficients of the polynomial. In Equation (6.5), \(\varphi\) is given with respect to the monomial basis \(\{1,x,x^2,x^3,\ldots,x^n\}.\) As we will see later, also different representations of \(\varphi,\) which do not use monomials, are possible. Thus, it is important to distinguish between the polynomial \(\varphi\) and its representations.

In \(P^n([a,b]),\) the interpolation problem reads:

Given \(f \in C([a,b]),\) find for a given grid \(\Delta_n\) a function \(p_n\in P^n\) with \[\tag{6.6} p_n(x_i) = f(x_i), \qquad i=0, \ldots, n\, .\]

We would like to understand whether the interpolant exists and is unique. We start with uniqueness.

If the interpolation problem (6.6) has a solution, it is unique.

Proof. Let \(p, q\in P^n\) be two interpolation polynomials with \(p(x_i)=q(x_i) = f(x_i)\) for \(i=0,\ldots, n.\) Then, \(p-q\) is a polynomial of degree less than or equal to \(n\) with the \(n+1\) zeros \(x_0,\ldots,x_n.\) Since a non-constant polynomial of degree \(n\) can have at most \(n\) zeros, \(p-q\) has to be the null polynomial.

We supposed that all \(x_i\) are distinct. Otherwise, we would have less than \(n+1\) zeros of \(p-q.\)

We will show the existence of the interpolation polynomial later using Lagrange polynomials.

Due to the uniqueness proved in 6.1, we can write the solution to the interpolation problem (6.6) as \(p_n=p(x_0, \ldots, x_n|f).\) As an additional simplification, we consider the affine transformation \[\eta=\eta(x)=a+x\cdot (b-a)\, .\] It translates and rescales the interval \([0,1]\) to \([a,b].\) Therefore, it suffices to consider (6.6) on the fixed interval \([0,1]\) from now on.

There are several ways to represent the polynomials in \(P^n.\) Two of the most common are

the monomes \(x^i,\; i=0, \ldots, n\) and

the Lagrangian polynomials \(L_k^n,\) sometimes called the nodal basis with \(L_i^n(x_j)=\delta_{ij}\) for \(x_j\in\Delta_n.\)

Here, we have used the Kronecker-\(\delta\) \[\tag{6.7} \begin{aligned} \delta_{ij} =\left\{\begin{aligned}1 &, \, i=j\\ 0 &,\, i\not= j\end{aligned}\right. \end{aligned}\] The Lagrangian basis functions can be written as \[\tag{6.8} L_i^n(x)\;=\;\prod_{\substack{k=0\\k \neq i}}^n\, \frac{x-x_k}{x_i-x_k},\qquad i=0, \ldots, n.\] In particular, we have that \[\tag{6.9} p_n(x) = p(x_0, \ldots, x_n|f) \;=\; \sum_{i=0}^n f(x_i)L_i^n(x)\, .\] Please note that in Equation (6.9) the values \(f(x_i)\) are the coefficients of \(p_n\) with respect to the Lagrangian basis.

We can define by means of the Euclidean scalar product \(\langle \cdot,\cdot \rangle\) a scalar product on \(P^n\) as follows: \[\tag{6.10} \langle p,q \rangle \;=\; \sum_{i=0}^n p(x_i)\cdot q(x_i)\, .\] Inserting \(p=L_k^n\) and \(q=L_l^n\) into Equation (6.10), we get \[\begin{aligned} \langle L_k, L_l\rangle \;&=\; \sum_{i=0}^n L_k(x_i)L_l(x_i)\\ &=\; \sum_{i=0}^n \delta_{ki}\delta_{li} \;=\; \delta_{kl}, \, \end{aligned}\] which implies that the Lagrange polynomials are orthogonal with respect to the scalar product (6.10).

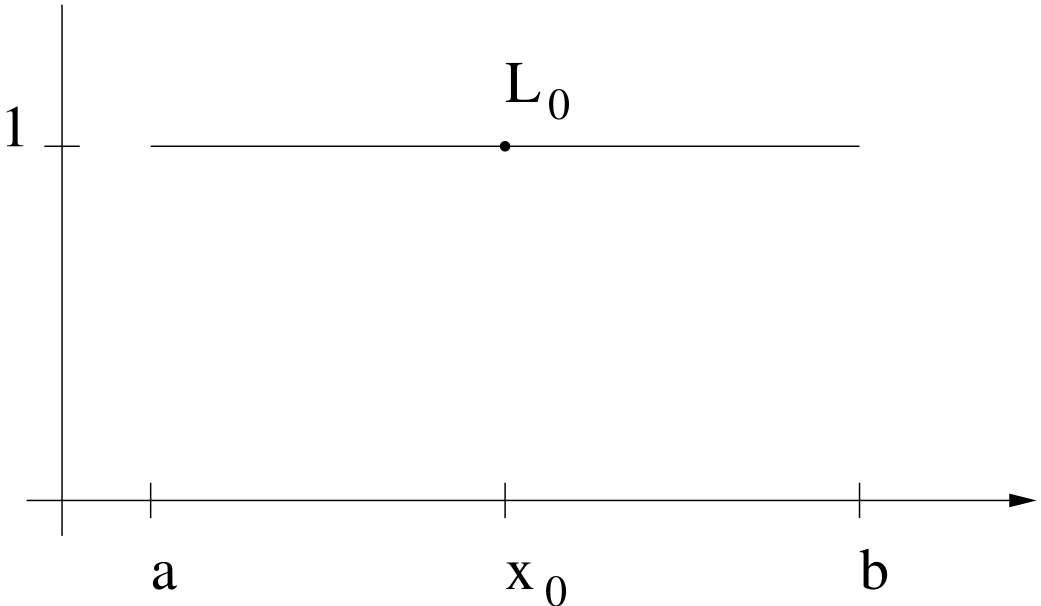

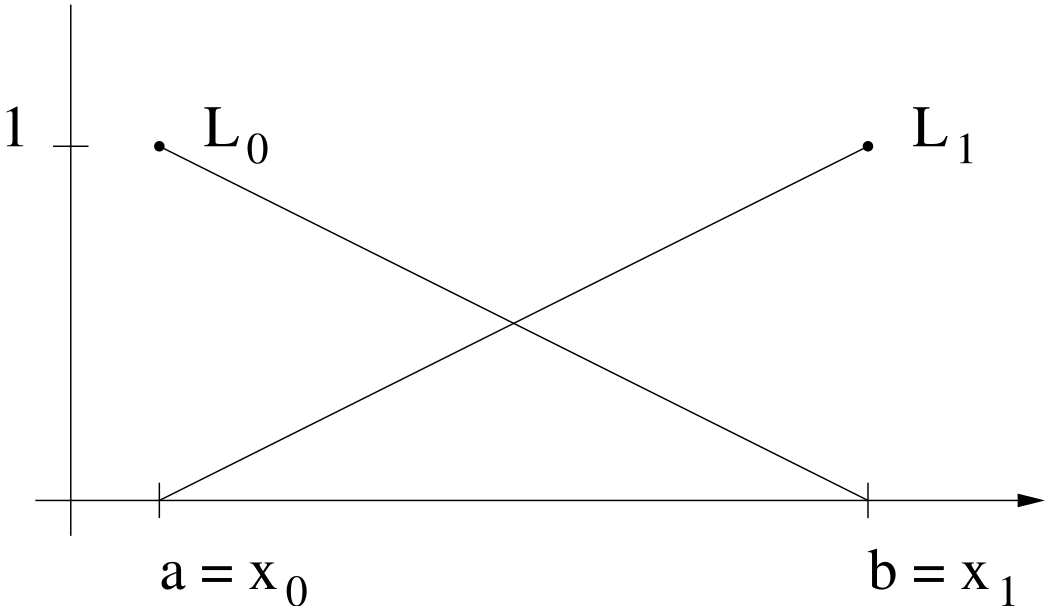

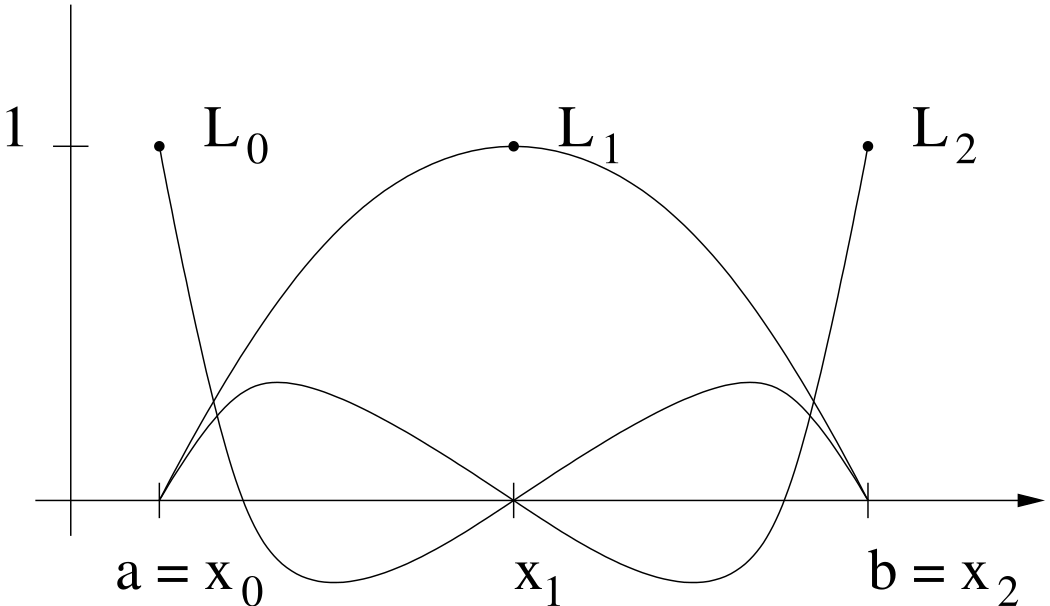

In Figure 6.2–Figure 6.4, the Lagrangian polynomials are plotted for \(n=0,1,2.\)

Computing the coefficients \(\{a_i\}\) of \(p_n\) with respect to the monomial basis leads to the following system of linear equations: \[\underbrace{\begin{pmatrix} 1 & x_0 & x_0^2 & \cdots & x_0^n\\ \vdots & \vdots & \vdots & & \vdots\\ 1 & x_n & x_n^2 & \cdots & x_n^n \end{pmatrix}}_{=:\;{\mathcal V}_n} \begin{pmatrix} a_0\\ \vdots\\ a_n \end{pmatrix} \;=\; \begin{pmatrix} f(x_0)\\ \vdots \\ f(x_n) \end{pmatrix} \tag{6.11}\] The matrix \({\mathcal V}_n\) is called the Vandermonde Matrix . Its determinant can be computed as follows: \[\det({\mathcal V}_n) \;=\; \prod_{i=0}^n \, \prod_{j=i+1}^n(x_i-x_j).\] It is different from \(0\) if all \(x_i\) are distinct. For large \(n,\) the Vandermonde matrix \({\mathcal V}_n\) becomes almost singular. This means that we have to solve a badly-conditioned system. Furthermore, the solution of this system also might require a substantial amount of time, i.e. it is of order \(O(n^3),\) as the matrix \({\mathcal V}_n\) is dense. We want to avoid this and, therefore, look for alternative ways to compute and evaluate the interpolation polynomial.

The particular form of the Lagrange polynomials allows us to provide an existence result for our interpolation problem.

The interpolation problem (6.6) has a solution in \(P^n.\)

Proof. Set \[\varphi(x) = \sum_{i=0}^n f(x_i) L_i^n (x)\, .\] We have \(\varphi\in P^n.\) Furthermore, we have for \(i=0,\ldots,n\) that \[\varphi(x_j) = \sum_{i=0}^n f(x_i) L_i^n (x_j) = \sum_{i=0}^n f(x_i) \delta_{ij} = f(x_j)\, .\] Thus, \(\varphi\) fulfills the interpolation conditions (6.1).

Together with Theorem 6.2, we therefore have shown:

The interpolation problem (6.6) has a unique solution in \(P^n.\)

We note that also other interpolants can be constructed. Our existence and uniqueness result has been shown only for polynomials of order \(n,\) i.e. for \(P^n\) as the approximation space. Furthermore, the interpolation polynomial in general will depend on the chosen mesh \(\Delta_n.\)

6.2 Properties of the Interpolation Process

Since the interpolation problem (6.1) has a unique solution in \(P^n,\) it is justified to think about interpolation as a mapping from “data space” to “polynomial space”. As we can see from (6.1), the interpolation polynomial is constructed by taking the values of \(f\) at the mesh points \(x_0,\ldots,x_n\) and then building the interpolation polynomial using (6.9). Thus, as “data space” we can identify the space of continuous functions \(C([0,1]),\) and the function \(f\in C([0,1])\) is our input. The output of our interpolation mapping then is simply the interpolation polynomial \(p(x_0,\ldots,x_n|f).\)

The above considerations motivate us to make the following definition.

We call the mapping \[\tag{6.12} \begin{aligned} I _n:\; C([0,1]) \;&\longrightarrow\; P^n([0,1])\\ f \;&\longmapsto\; p_n = p(x_0,\ldots, x_n|f) \end{aligned}\] interpolation operator and we write \[I_n(f) = p(x_0,\ldots, x_n|f) = p_n\, .\]

We would like to collect some properties of the interpolation operator or, in other words, we would like to understand the process of interpolation better.

Our first question is connected to the question of what will happen if we multiply the function \(f\) by a scalar \(\alpha \in\mathbb{R},\) i.e. how are the two polynomials \(p(x_0,\ldots, x_n|f)=I_n(f)\) and \(p(x_0,\ldots, x_n|\alpha f)=I_n(\alpha f).\) related? We answer this question by computing \[\begin{aligned} I_n(\alpha f) & = p(x_0,\ldots, x_n|\alpha f) \\ & = \sum_{i=0}^n \alpha \cdot f(x_i) L_i^n\\ & = \alpha \sum_{i=0}^n f(x_i) L_i^n\\ &= \alpha \cdot p(x_0,\ldots, x_n|f) \\ &= \alpha \cdot I_n(f)\, . \end{aligned}\] In other words: if we multiply \(f\) by a scalar \(\alpha,\) all we need to do is to multiply the interpolation polynomial by the same \(\alpha\) in order to interpolate.

We now investigate what happens if we take the sum of two functions, say \(f,g\in C([0,1]).\) \[\begin{aligned} I_n(f + g) & = p(x_0,\ldots, x_n|f + g) \\ & = \sum_{i=0}^n (f(x_i)+g(x_i)) L_i^n\\ & = \sum_{i=0}^n f(x_i) L_i^n + \sum_{i=0}^n g(x_i) L_i^n\\ &= p(x_0,\ldots, x_n|f) + p(x_0,\ldots, x_n|g) \\ &= I_n(f) + I_n(g)\, . \end{aligned}\] Thus, we can use the sum of the interpolants to interpolate a sum of two functions. We collect the properties which we have derived above.

Let \(f,g\in C([0,1]),\) \(\alpha\in\mathbb{R}\) and \(I_n\) the interpolation operator from (6.12). Then, it holds \[\begin{aligned} I_n(\alpha f) &= \alpha I_n(f)\\ I_n(f+g) &= I_n(f) + I_n(g)\, . \end{aligned}\] To conclude our investigation of the interpolation operator, we eventually investigate what happens if we interpolate the same function twice. To this end, let \(I_n(f)=p(x_0,\ldots,x_n|f)\) be given. We now interpolate again, i.e. we compute \[\tag{6.13} \begin{aligned} I_n(I_n(f)) &= I_n(p(x_0,\ldots,x_n|f)) \\ \nonumber &= \sum_{i=0}^n p(x_0,\ldots,x_n|f) (x_i) L_i^n\\ \nonumber &= \sum_{i=0}^n f(x_i) L_i^n\\ \nonumber &= I_n(f)\, . \end{aligned}\] In other words, \(I^2_n(f)=I_n(I_n(f))=I_n(f).\)

6.3 Condition of Polynomial Interpolation

We now would like to have a closer look at the condition number of the interpolation problem.

For simplicity, we consider first a perturbation in a single datum \(f(x_{i_0}),\) where the index \(i_0\in \{0,\ldots,n\}\) is arbitrary but fixed. To this end, we take a function \(\tilde{f}\in C([a,b])\) with \(f(x_i)=\tilde{f}(x_i)\) for \(i\in \{0,\ldots,n\}\setminus\{i_0\}\) and \(\tilde{f}(x_{i_0}) = f(x_{i_0}) +\delta_{i_0}\) for a small perturbation \(\delta_{i_0}.\) This leads for \(x\in[0,1]\) to \[\begin{aligned} |I_n(f)(x)-I_n(\tilde{f})(x)| &= |p_n(f)(x)-p_n(\tilde{f})(x)|\\ &= \left| \sum_{i=0}^n f(x_i) L_i^n(x) - \sum_{i=0}^n \tilde{f}(x_i) L_i^n(x)\right|\\ &= \left| \sum_{i=0}^n (f(x_i) - \tilde{f}(x_i)) L_i^n(x)\right|\\ &= | (f(x_{i_0}) - \tilde{f}(x_{i_0})) L_{i_0}^n(x)|\\ &= | f(x_{i_0}) - \tilde{f}(x_{i_0})|\cdot |L_{i_0}^n(x)|\, .\\ \end{aligned}\] Taking the supremum for \(x\in[0,1],\) we see \[\tag{6.14} \begin{aligned} \sup_{x\in [0,1]} |p_n(f)(x)-p_n(\tilde{f})(x)| &= \sup_{x\in [0,1]} |L_{i_0}^n(x)| \cdot| f(x_{i_0}) - \tilde{f}(x_{i_0})|\, . \end{aligned}\] This estimate, which is done for a single component only, shows that the absolute maximal values of the Lagrange polynomial \(L_{i_0}^n\) \[\tag{6.15} \begin{aligned} \lambda_{i_0} = \sup_{x\in [0,1]} |L_{i_0}^n(x)| \end{aligned}\] is the amplification factor for perturbations in datum \(i_0.\)

The intuition emerging from this estimate is that a perturbation of the data point \(y_{i_0}=f(x_{i_0})\) can be amplified by the factor \(\lambda_{i_0}.\) In other words, the largest (absolute) value of the Lagrange polynomial \(L^n_{i_0}\) is also the amplification factor for perturbations in datum \(i_0.\) Thus, the more the Lagrange polynomials oscillate, the larger the condition number of the interpolation process.

We refer to Voluntary Material — Condition Number and Chebyshev Nodes which illustrates that for increasing \(n,\) the Lagrange polynomials show stronger and stronger oscillations.

As it turns out, the supremum in (6.15) depends on the choice of the nodes \(x_n.\) Desirably low values are obtained for the Chebychev nodes, which are defined on the interval \([-1,1]\) by \[x_i \;=\; \cos\left(\frac{2i+1}{2n+2}\pi\right),\quad i=0, \ldots, n\]

6.4 Divided Differences

We have seen above that we can represent the interpolation polynomial using either the monomial basis or the Lagrange basis. For theoretical purposes, the Lagrange basis has proven convenient. Existence of the interpolation polynomial as well as analysis of the condition of polynomial interpolation was done using the Lagrange polynomials.

We now look at polynomial interpolation from a more practical point of view. If we represent the interpolation polynomial \(p_n=p_n(x_0,\ldots,x_n|f)\) in the Lagrange basis, as is done in (6.9), we can readily take the values \(f(x_i),\) \(i=0,\ldots,n\) as coefficients. However, to evaluate \(p_n\) at some point \(x,\) we have to evaluate the, in practice, less inconvenient Lagrangian polynomials. We, therefore, try to find an alternative representation of \(p_n.\)

We start with a technical but useful property of interpolation polynomials.

Let the points \(\Delta_n=\{x_0<\cdots< x_n\}\) be given. Then it holds for the interpolation polynomial \(p = p(x_0, \ldots, x_n\, | f)\) that \[\tag{6.16} \begin{aligned} p(x)&=\frac{(x_i-x) \, p(\Delta_n \setminus \{x_i\}\, |\, f)(x) - (x_j-x) \, p(\Delta_n \setminus \{x_j\} \, |\, f)(x)}{x_i-x_j}\\ \nonumber &=\frac{(x_i-x) \, p(x_0 , \ldots ,\hat x_i , \ldots ,x_n\, |\, f)(x) - (x_j-x) \, p(x_0 , \ldots ,\hat x_j , \ldots ,x_n\, |\, f)(x)}{x_i-x_j} \end{aligned}\] The notation \(\hat x_i\) means that the point \(x_i\) is removed (“nimmt seinen Hut”).

Proof. We exploit the interpolation conditions. First, we insert \(x=x_i\) into (6.16) and get \[\begin{aligned} p(x_i) &= \frac{(x_i-x_i) \, p(\Delta_n \setminus \{x_i\}\, |\, f) (x_i) - (x_j-x_i) \, p(\Delta_n \setminus \{x_j\}\, | \, f)(x_i)}{x_i-x_j}\\ &= \frac{0 - (x_j-x_i) \, f(x_i)}{x_i-x_j}\\ &= f(x_i)\, . \end{aligned}\] A similar calculation leads to \(p(x_j)=f(x_j).\) For \(k\not =i,j\) we have \[\begin{aligned} p(x_k) &= \frac{(x_i-x_k) \, p(\Delta_n \setminus \{x_i\}\, | \, f) (x_k) - (x_j-x_k) \, p(\Delta_n \setminus \{x_j\}\, | \, f)(x_k)}{x_i-x_j}\\ &= \frac{(x_i-x_k) f(x_k) - (x_j-x_k) f (x_k)}{x_i-x_j}\\ &= \frac{(x_i-x_j) f(x_k) - (x_k-x_k) f (x_k)}{x_i-x_j}\\ &= f(x_k)\, . \end{aligned}\]

We now define the Newton basis, which will help us to compute the interpolation polynomial in a more efficient and flexible way.

We call for \(i=0,\ldots,n\) the polynomial \[\omega_i(x)=\prod_{k=0}^{i-1}(x-x_k)\, ,\quad \omega_k\in P^i,\] Newton polynomial and we call \(\{\omega_i\}_{i=0,\ldots,n}\) the Newton-basis of \(P^n\)

The leading coefficient \(a_n\) of the interpolation polynomial \[p(\Delta_n\, | f\,)(x) = a_n x^n + \cdots + a_1 x + a_0\] of \(f\) for the nodes \(\Delta_n=\{x_0<\cdots< x_n\}\) is called \(n\)-th divided difference of \(f\) to \(x_0 , \ldots ,x_n.\) We introduce the notation \[[x_0 , \ldots ,x_n]f:=a_n\, .\]

Let \(f\in C ^n\) and \(\Delta_n=\{x_0<\cdots< x_n\}.\) Then the interpolation polynomial \(p(\Delta_n\, |\, f)\) of \(f\) for the points \(\Delta_n\) is given by \[p:=\sum_{i=0}^n \, [x_0,\ldots,x_i]f\cdot \omega_i\]

Proof. The proof is carried out by induction in \(n\):

\(n=0\): Clear.

\(n>0\): Let \(p_{n-1} := p(x_0 , \ldots ,x_{n-1}\, | \, f) = \sum_{i=0}^{n-1}[x_0 , \ldots ,x_i]f\cdot \omega_i.\)

Then for \(p_n = p(x_0 , \ldots ,x_n\, |\, f)\) we have \[\begin{aligned}

p_n(x)&=[x_0 , \ldots ,x_n]f \cdot x^n+ \cdots + a_0\\

&=[x_0 , \ldots ,x_n]f\cdot \omega_n(x) + Q_{n-1}(x)

\end{aligned}\] with \(Q_{n-1}\in P_{n-1}.\) For the polynomial \[Q_{n-1}=p_n-[x_0,\ldots,x_n]f\cdot \omega_n\] we see that, by definition of the Newton polynomials, the polynomial \(Q_{n-1}\) fulfills the interpolation conditions in \(x_0 , \ldots ,x_{n-1},\) i.e. for \(j=1,\ldots, n-1\) below: \[\begin{aligned}

Q_{n-1}(x_j)

&= p_n(x_j)-[x_0,\ldots,x_n]f\cdot \omega_n (x_j)\\

&= f(x_j) - [x_0,\ldots,x_n]f\cdot \prod_{i=0}^{n-1}\underbrace{(x_j-x_i)}_{=0\text{ for } j=i}\\

&= f(x_j)\, .

\end{aligned}\] Thus, by the uniqueness of the interpolating polynomial and by our assumption, we get \[\begin{aligned}

Q_{n-1} &= p_{n-1}\\

&= \sum_{i=0}^{n-1}[x_0 , \ldots ,x_i]f\cdot \omega_i.

\end{aligned}\]

For the divided difference \([x_0 , \ldots ,x_n]f\) we have for \(f\in C ^n\):

\([x_0 , \ldots ,x_n]p=0\) for all \(p\in P_{n-1}\)

If \(x_0=x_1=\cdots=x_n,\) we have \[[x_0 , \ldots ,x_n]f=\frac{f^{(n)}(x_0)}{n!}\]

For \(x_0<\cdots<x_n\) we have the recursion formula \[[x_0 , \ldots ,x_n]f=\frac{[x_0 , \ldots ,\hat x_i , \ldots ,x_n]f-[x_0 , \ldots ,\hat x_j , \ldots ,x_n]f}{x_j-x_i}\]

Proof.

The \(n\)-th coefficient of any \(p\in P^{n-1}\) vanishes, which implies i).

Will be shown in a later semester using Taylor expansion.

Follows from Lemma 6.4 together with the uniqueness of the leading coefficient.

The above recursion formula can be visualized as follows: \[\begin{matrix} f(x_0)=[x_0]f \\ & \searrow \\ f(x_1)=[x_1]f & \rightarrow & [x_0,x_1]f \\ \vdots & & & \ddots \\ f(x_{n-1})=[x_{n-1}]f & \rightarrow & \cdots & \rightarrow & [x_0,\ldots,x_{n-1}]f \\ & & & & & \searrow \\ f(x_n)=[x_n]f & \rightarrow & \cdots & \rightarrow & [x_1,\ldots,x_n]f & \rightarrow & [x_0,\ldots,x_n]f \end{matrix}\]

We can compute the interpolation polynomial for the following values

| \(t_i\) | \(0\) | \(1\) | \(2\) | \(3\) |

|---|---|---|---|---|

| \(f_i\) | \(1\) | \(2\) | \(0\) | \(1\) |

using divided differences as follows

| \([t_0]f = 1\) | |||

| \([t_1]f = 2\) | \([t_0,t_1]f = 1\) | ||

| \([t_2]f = 0\) | \([t_1,t_2]f = -2\) | \([t_0,t_1,t_2]f = -3/2\) | |

| \([t_3]f = 1\) | \([t_2,t_3]f = 1\) | \([t_1,t_2,t_3]f = 3/2\) | \([t_0,t_1,t_2,t_3]f = 1,\) |

also \[(\alpha_0,\alpha_1,\alpha_2,\alpha_3)=(1,1,-3/2,1).\] Consequently, the interpolation polynomial is \[\begin{aligned} P(t) &= 1+1(t-0)+(-3/2)(t-0)(t-1)+1(t-0)(t-1)(t-2)\\ &= t^3-4.5t^2+4.5t+1. \end{aligned}\]

Using divided differences, we can now easily evaluate the interpolation polynomial:

\[\begin{aligned}

p(\Delta_n\, |\, f)(x) &= p_n(x)=\sum_{i=0}^n\overbrace{[x_0 , \ldots ,x_n]f}^{=:a_i}\prod_{k=0}^{i-1}(x-x_k)\\

&= a_0+\sum_{i=1}^n a_i\prod_{k=0}^{i-1}(x-x_k)

\end{aligned}\] By factoring out, we get: \[p_n(x)=a_0+(x-x_0)(a_1+(x-x_1)(a_2+(\cdots+(x-x_{n-1})a_n)\cdots)\] which leads us to the Horner–scheme, which we had already investigated.

(Horner-scheme for Newton polynomials)

| Initialisation | : | \(S_n=a_n\) |

| Recursion | : | for \(k=n-1,\ldots,0\) do \(S_k=S_{k+1}\cdot (x-x_k)+a_k\) |

| Result | : | \(p_n(x)=S_0\) |

Usually, first, the coefficients are computed using the recursion formula, and then the polynomial is evaluated using the Horner scheme.

The Horner–scheme requires only \({\mathcal O}(n)\) operations.

The computation of the divided differences \(a_i,~i=0,\ldots,n\) requires \({\mathcal O}(n^2)\) operations

6.5 The Algorithm of Aitken-Neville

Sometimes, we only want to evaluate the interpolation polynomial \(p = p(f|\Delta_n)\) at a small number of points. There is an efficient way of doing exactly this without having to actually compute the polynomial. We also save the actual evaluation. The value of \(p\) at a given \(x\in[a,b]\) can be computed recursively. To this end, we first state a recursive formula for this value and later propose an easy scheme to actually compute it.

For the interpolation polynomial \(p = p(\Delta_n|f)\) there is the recursive formula \[p(x) \;=\; \underbrace{\frac{(x_0-x) p(x_1, \ldots, x_n|f) (x) - (x_n-x) p(x_0, \ldots, x_{n-1}|f)(x)}{x_0-x_n}}_{=:\alpha(x)}\]

Proof. We have \(\alpha\in P^n\) and, with \(f_i=f(x_i),\) we can write \[\alpha(x_i) \;=\; \frac{(x_0-x_i) f_i - (x_n-x_i) f_i}{x_0-x_n} \;=\; f_i, \qquad i = 1, \ldots, n-1.\] It is obvious that \(\alpha(x_0)=f_0\) and \(\alpha(x_n) = f_n.\) From the uniqueness of the interpolation polynomial (Theorem 6.1), the conjecture follows.

For the sake of readability, we write, for \(i<k\): \[P_{ik} \;=\; p(x_{i-k}, \ldots, x_i|f)(x)\]

Wanting to compute the value of our interpolation polynomial \(p(x_0,\ldots, x_n|f)\) at the point \(x,\) we can now recursively compute the value \(p(x_0, \ldots, x_n|f)(x) = P_{nn}\) using the algorithm of Aitken-Neville:

\[\begin{matrix} P_{0,0} \\ & \searrow \\ P_{1,0} & \rightarrow & P_{1,1} \\ \vdots & & & \ddots \\ P_{n-1,0} & \rightarrow & \cdots & \rightarrow & P_{n-1,n-1} \\ & & & & & \searrow \\ P_{n,0} & \rightarrow & \cdots & \rightarrow & P_{n,n-1} & \rightarrow & P_{n,n} \end{matrix}\]

This scheme is evaluated according to the following rules: \[\begin{aligned} P_{i,0} \;&=\; f_i,\qquad i=0, \ldots, n\\ P_{i,k} \;&=\; P_{i,k-1} + \frac{x-x_i}{x_i-x_{i-k}} (P_{i,k-1}-P_{i-1,k-1}), \qquad i \geq k. \end{aligned}\]

The algorithm of Aitken-Neville has the following properties:

For the computation of \(P_{n,n}=p(x)\) using the algorithm of Aitken-Neville we need \(\frac{n(n+1)}2\) floating point operations

Adding an additional node \(x_{n+1}\in(a,b)\) requires \(n+1\) operations

Each new row corresponds to increasing the order of the approximating polynomial by 1

The Aitken-Neville algorithm is closely related to extrapolation methods for ordinary differential equations

In case we have \(f(x_i)\approx f(x_{i+1})\) or \(x_i\approx x_{i+1}\) for some \(i\in\{0, \ldots, n-1\},\) cancellation of leading digits may occur.

6.6 Interpolation Error

Let \(f\) be \(n+1\)-times continuously differentiable on the interval \([a,b],\) i.e. let \(f\in C^{n+1}[a,b].\) Then, for every \(x\in[a,b]\) there exists a \(\xi(x)\in[a,b]\) such that \[f(x)-p_n(x)=\frac{f^{(n+1)}(\xi(x))}{(n+1)!}\omega_{n+1}(x)\] holds.

We will prove this formula later.

6.7 Piecewise linear Interpolation

We choose \[U_n = S_n = \{v\, | \, v \in C[a,b], \, v|_{[x_{k-1},x_k]} = p_k, \, p_k \in P_1, \, k = 1 , \ldots ,n\}\] the space of continuous and piecewise linear functions.

The interpolation problem: Find \[u_n \in S_n\colon \quad u_n(x_k)=f(x_k), \, k=0 , \ldots ,n\] has the solution \[u_n=\sum_{k=0}^nf(x_k)\phi_k \text{ with } \phi_k\in S_n,~\phi_k(x_l)=\delta_{kl} \text{ (nodal basis)}\]

Let \(a=x_0,\) \(x_n=b\) and \(f\in C^2[a,b].\)

Then, we have \[\sup_{x\in[a,b]}|f(x)-u_n(x)|\leq h^2 \frac{\sup_{x\in[a,b]} |f^{\prime\prime}(x)|}{8}\, ,\] with \[h= \max_{k \, = \, 1 , \ldots ,n}h_k, \, h_k=x_k-x_{k-1}\, .\]

Proof. The error estimate for polynomial interpolation implies locally, i.e. for \(k=1,\ldots,n\) \[\begin{aligned}

\max_{x\in [x_{k-1},x_k]}|f(x)-u_n(x)|&\leq&\max_{x\in [x_{k-1},x_k]}

\frac{|f^{\prime\prime}(x)|}2\overbrace{\max_{x\in [x_{k-1},x_k]}|(x-x_{k-1})(x-x_k)|}^{=h^2/4}\\

&=&h_k^2\max_{x\in [x_{k-1},x_k]}

\frac{|f^{\prime\prime}(x)|}8,

\end{aligned}\] since with \(x_1=x_k,\) \(x_0=x_{k-1}\) we have \[\begin{aligned}

& ((x-x_1)(x-x_0))^\prime \\

= & 1(x-x_0)+1(x-x_1)\\

= & x-x_0+x-x_1\\

= & 2x-x_0-x_1\\

= & 0\\

\Leftrightarrow & x = \frac{x_0+x_1}{2} = x_0 + \frac{h}{2}\\

\end{aligned}\]

\(x_1 = x_0 + h\): \[\begin{aligned}

\max&=&|\left(x_0+\frac{h}2-x_0-h\right)\left(x_0+\frac{h}2-x_0\right)|\\

&=&|\left(-\frac{h}2\right)\left(\frac{h}2\right)|=\frac{h^2}4

\end{aligned}\]

Disdavantage

The interpolant \(u_n\) has "kinks" and is therefore unsuitable for many applications (computer graphics, etc.).