Introduction

Optimization and machine learning are distinct topics, and one could easily dedicate entire separate courses to each of them. There is however a strong connection between the two: the learning step of most machine learning algorithms involves solving an optimization problem. Optimization problems also arise in science, engineering, economics, applied mathematics, etc.

Optimization

The problem \[\text{``What is the maximal value of }\:f(x)=x^{2}-x^{4}\text{?''}\tag{}\] is a basic example of an optimization problem. This very simple problem can be solved by hand, or “visually” using the following graph.

A more complicated type of problem which we will study is the optimization of functions of several variables, which are subject to constraints. We will also consider large scale optimization problems over say millions of variables of the type \[\text{``What is the maximal value of }f(x_{1},\ldots,x_{10^{6}})\text{?''}\] Unless \(f\) has some special structure that can be exploited, such a problem can not be solved by hand. We will study numerical optimization algorithms for such such problems.

Machine learning

Machine learning is about algorithms that can be taught to carry out tasks, instead of being explicitly programmed with the exact detailed steps needed to carry out each possible variation of the task, as in traditional programming. Many such tasks can be formulated in terms of producing a desired output when presented with an input, to be learned from a dataset of input-output pairs created by humans. A very simple machine learning algorithm for this type of task is linear regression. For instance, if one has a dataset of floor area of apartments (input) together with their rent (output) for a certain neighborhood, one can find the linear function that best fits the data, and use it to predict the rent of future apartments in the neighborhood. See Figure 2.

Despite its simplicity linear regression is indeed a machine learning algorithm. It can also be considered a statistical tool - indeed machine learning and statistics have a lot in common.

Finding the best fitting line involves an optimization problem: an appropriate function \(f(a,b)\) captures the quality of the fit for a line with slope \(a\) and intercept \(b,\) and one optimizes it to find the line of best fit. For linear regression this optimization problem can be solved by hand.

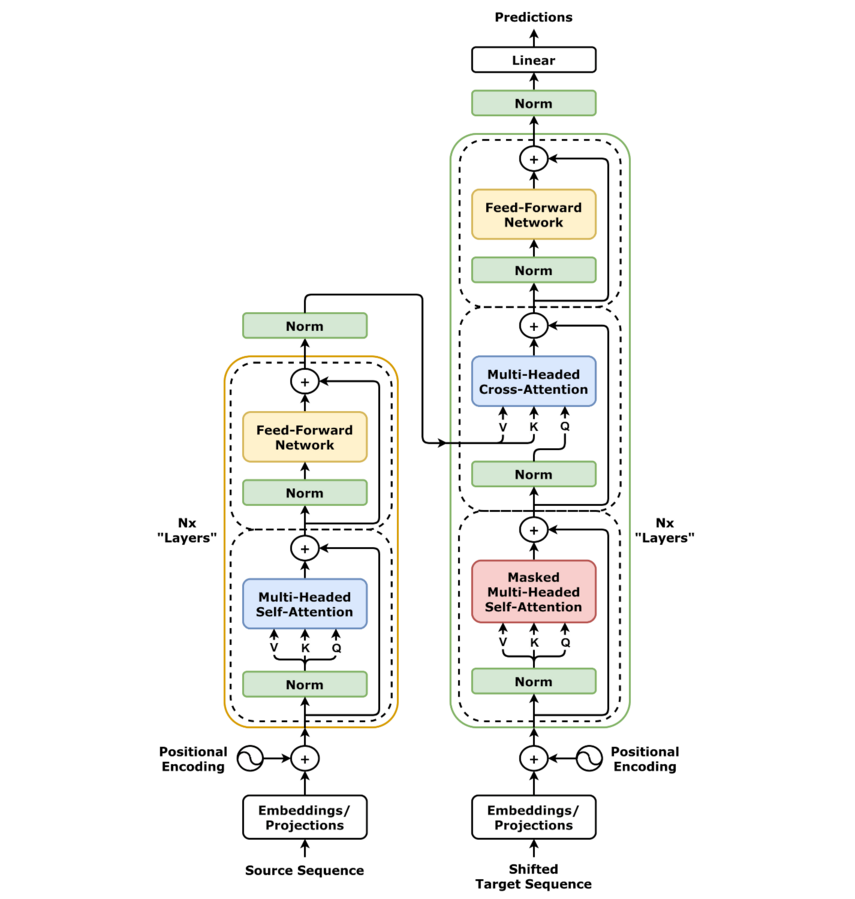

Artificial neural networks (ANN) - such as Large Language Models (LLM) - are more sophisticated machine learning algorithms, many of which are however essentially of the same type as linear regression. Their input and outputs can be images, text, sound, etc, and their output is produced from a sophisticated transformation of the input whose details are specified by parameters - rather than just the two parameters \(a,b\) of the linear regression above, these can number in the hundreds, thousands, millions or billions. The quality of the output produced on a given human prepared dataset is measured by a function \(f(x_{1},\ldots,x_{\#\text{param}}),\) and the ANN is “taught” by using a numerical optimization algorithm to optimize \(f.\)

Architecture

Credit: https://commons.wikimedia.org/wiki/File:Transformer,_full_architecture.png

\[\text{``Minimize }f(x_{1},\ldots,x_{\text{\#params}})\text{''}\]

“Learning” amounts to numerically optimizing a function \(f\) measuring the quality of the ANNs prediction on the dataset for given parameters \(x_{1},\ldots,x_{\text{\#params}}.\)

Optimization is an import aspect of machine learning, but ultimately it is only a means to the end of generalization. A machine learning algorithm generalizes if it produces “good” output for new inputs that are not in the dataset. Given how we expect rent to relate to floor area, the linear regression shown in Figure 2 is likely to generalize well. But a machine learning algorithm based on polynomial interpolation applied to the same task dataset produces the prediction curve in the following figure.

This algorithm predicts wildly different rents for apartments of similar size, and even predicts negative rents for some sizes. For most apartment sizes not in the dataset the prediction is terrible, despite the prediction curve fitting the dataset perfectly. This is an example of poor generalization (the algorithm that produced the prediction in Figure 4 doesn’t always generalize poorly, but in this situation it does). The goal of machine learning is to find an algorithm that generalizes well.

The first part of the course deals with optimization. Given a function \(f\) to optimize, the basic goal is to find the maximum or minimum of the function. You should already be familiar with the basic technique for solving such problems by hand: solving the critical point equation \(\nabla f=0.\) In the first chapter we will recall this technique, and several concepts from the courses Analysis I & II (formerly Calculus I & II) that are relevant to optimization (such as convexity).

In the second chapter we turn to constrained optimization, where one seeks to optimize the function \(f(x),\) but only among values of \(x\) that satisfy some set of constraints. After that we will study numerical optimization algorithms, which can be used for problems that are too large to solve by hand.

More precisely, this course is concerned with continuous optimization, that is the optimization of functions of continuous variables \(x\) (i.e. taking value in some interval of \(\mathbb{R}\)). By contrast, discrete optimization deals with the optimization of functions of variables that take a discrete set of values. The theory of discrete optimization is quite different from that of continuous optimization, and it is beyond the scope of this course.

0.1 Fundamentals

Although time-consuming, this is in fact the best way to study a mathematical text whether the proofs are new to you or not.

0.1.1 Global and local extrema of functions

Recall the concept of infimum and supremum of a subset of \(\mathbb{R}\): for instance \[\inf\:(-1,1)=\inf\:[-1,\infty)=-1\qquad\inf\:\mathbb{Z}=-\infty.\] In general the infimum of a set \(A\subset\mathbb{R}\) is the largest \(x\in\mathbb{R}\) s.t. \(a\ge x\) for all \(a\in A,\) or \(-\infty\) if no such \(x\) exists; the supremum is defined analogously. From this we get the concept of infimum and supremum of a function.

Let \(D\) be a set and \({f:D\to\mathbb{R}}\) a function with domain \(D\) and image \(f(D).\)

[Infimum]The infimum of \(f\) is \[\inf_{x\in D}f(x):=\inf f(D)\in\mathbb{R}\cup\{-\infty\}.\]

[Supremum]The supremum of \(f\) is \[\sup_{x\in D}f(x):=\sup f(D)\in\mathbb{R}\cup\{\infty\}.\]

Optimization problems are about finding the infimum or supremum of functions. Next recall the related concept of global minimum/maximum.

Let \(D\) be a set, \(f:D\to\mathbb{R}\) a function and \(x\in D\) a point.

[Glob. min.]The point \(x\) is a global minimizer or global minimum point if \[f(x)\le f(y)\quad\forall y\in D.\] We denote the set of all global minimizers by \(\mathrm{argmin}f\subset D.\) If a global minimizer \(x_{*}\in\mathrm{argmin}f\) exists we call \(f(x_{*})\) the global minimum value of the function and write \[\min_{y\in D}f(y):=f(x_{*}).\]

[Glob. max.]The point \(x\) is a global maximizer or global maximal point if \[f(x)\ge f(y)\quad\forall y\in D.\] We denote the set of all global maximizers by \(\mathrm{argmax}f\subset D.\) If a global maximizer \(x_{*}\in\mathrm{argmax}f\) exists we call \(f(x_{*})\) the global maximum value of the function and write \[\max_{y\in D}f(y):=f(x_{*}).\]

[Glob. extremum]If \(x\) is a global minimizer or global maximizer we call it a global extremum.

When it is clear from context what is meant, global minimum can refer to either the global minimum point or global minimum value, and similarly for global maximum.

A function always has an infimum and supremum (possibly \(\pm\infty\)), but not always a global maximum and minimum (point or value). If global minimizers resp. maximizers exist, then their value coincide with the infimum resp. supremum. That is, \(\inf_{x\in D}f(x)\) and \(\sup_{x\in D}f(x)\) are always defined, but \(\min_{x\in D}f(x)\) and \(\max_{x\in D}f(x)\) are not always defined - when \(\min_{x\in D}f(x)\) is defined then \(\inf_{x\in D}f=\min_{x\in D}f(x),\) and similarly for \(\max_{x\in D}f(x).\) The number \(|\mathrm{argmin}f|\) of global minimizers can be zero, one, \(\ge2\) or even infinite, as can the number \(|\mathrm{argmax}f|\) of global maximizers. See Figure 1.1 for some examples for functions with \(D\subset\mathbb{R}.\)

Next we recall the concept of local minimum/maximum.

Let \(D\) be a set and \(\rho\) a metric on \(D.\) Let \(f:D\to\mathbb{R}\) be a function and \(x\in D\) a point.

[Loc. min.] The point \(x\) is called a local minimum if there is a \(\delta>0\) s.t. \(f(x)\le f(y)\) for all \(y\in D\) with \(\rho(x,y)\le\delta.\) It is a strict local minimum if there is a \(\delta>0\) s.t. \(f(x)<f(y)\) for all \(y\in D\setminus\{x\}\) with \(\rho(x,y)\le\delta\) .

[Loc. max.] A point \(x\in D\) is called a local maximum if there is a \(\delta>0\) s.t. \(f(x)\ge f(y)\) for all \(y\in D\) s.t. \(\rho(x,y)\le\delta.\) It is a strict local maximum if there is a \(\delta>0\) s.t. \(f(x)>f(y)\) for all \(y\in D\backslash\{x\}\) s.t. \(\rho(x,y)\le\delta.\)

[Loc. extremum] If a point \(x\in D\) is a (strict) local minimum or maximum we call it a (strict) local extremum.

1.4. Though the definition is stated at the level of generality of metric spaces, the course will only deal with functions with domain \(D\subset\mathbb{R}^{d},d\ge1,\) which are metric spaces with the usual Euclidean distance \(\rho(x,y)=|x-y|.\) Then e.g. the condition for \(x\) to be a local minimizer is that there exists a \(\delta>0\) s.t. \(f(x)\le f(y)\) for all \(y\in D\) with \(|x-y|\le\delta.\)

Obviously, every global extremum is also a local extremum. The converse is not true. A function can have zero, one or several local minimizers/maximizers. See Figure 1.2 for some examples.

0.1.2 Critical points

Under appropriate conditions, a local or global extremum of a function \(f\) is a critical point of \(f.\) For instance, if \(f:\mathbb{R}\to\mathbb{R}\) is differentiable, then any local extremum \(x\) is a critical point (i.e. \(f'(x)=0\)). Furthermore if \(f:\mathbb{R}\to\mathbb{R}\) is twice differentiable and \(x\in\mathbb{R}\) is s.t. \(f'(x)=0\) and \(f''(x)>0,\) then \(x\) is a local minimizer. In this section we will recall these and related facts for functions \(f\) with domain \(D\subset\mathbb{R}^{d},d\ge1.\)

Recall that for \(D\subset\mathbb{R}^{d},d\ge1,\) and a differentiable function \(f:D\to\mathbb{R}\) the gradient \(\nabla f(x)\) at \(x\in D\) is the vector \[\nabla f(x)=(\partial_{1}f(x),\ldots,\partial_{d}f(x))\in\mathbb{R}^{d},\] consisting of the first order partial derivatives of \(f.\) If \(f:D\to\mathbb{R}\) is twice differentiable, the Hessian \(\nabla^{2}f(x)\) at \(x\in D\) is the symmetric \(d\times d\) matrix \[\nabla^{2}f(x)=\left(\begin{matrix}\partial_{11}f(x) & \ldots & \partial_{1d}f(x)\\ \ldots & \ddots & \ldots\\ \partial_{d1}f(x) & \ldots & \partial_{dd}f(x) \end{matrix}\right)\in\mathbb{R}^{d\times d},\] consisting of all the second order mixed partial derivatives.

(Critical. point) Let \(D\subset\mathbb{R}^{d},d\ge1,\) and \(f:D\to\mathbb{R}.\) If \(x\in D\) and there is a open neighborhood \(U\) of \(x\) such that \(f|_{D\cap U}\) is differentiable, and \[\nabla f(x)=0\] then we call \(x\) a critical point of \(f.\)

(Saddle point) If \(x\in D\) is a critical point of \(f\) which is not a local extremum of \(f,\) we call \(x\) a saddle point.

Informally, Taylor expansion to second order for an \(f\) with domain \(D\subset\mathbb{R}^{d}\) reads \[f(y)=f(x)+(y-x)\cdot\nabla f(x)+(y-x)^{T}\frac{\nabla^{2}f(x)}{2}(y-x)+\ldots.\] If \(x\) is a critical point then \(\nabla f(x)=0,\) so \[f(y)=f(x)+\underset{\text{quadratic term}}{\underbrace{(y-x)^{T}\frac{\nabla^{2}f(x)}{2}(y-x)}}+\ldots,\] and the nature of the critical point is determined by the quadratic term, which in turn depends on the eigenvalues of the symmetric matrix \(\nabla^{2}f(x).\)

If \(D\subset\mathbb{R}^{d},d\ge1\) is an open domain and \(f:D\to\mathbb{R}\) is differentiable, then \[\lim_{y\ne x,y\to x}\frac{|f(x)+(y-x)\cdot\nabla f(x)-f(y)|}{|y-x|}=0\quad\text{for any}\quad x\in D.\tag{1.4}\]

If in addition \(f\) is twice continuously differentiable, then for any \(x\in D\) \[\lim_{y\ne x,y\to x}\frac{|f(x)+(y-x)\cdot\nabla f(x)+(y-x)^{T}\frac{\nabla^{2}f(x)}{2}(y-x)-f(y)|}{|y-x|^{2}}=0.\tag{1.5}\]

We now carefully state the conditions under which a local extremum is a critical point. For any set \(A\) in a topological space, let \(A^{\circ}\) denote its interior (so that e.g. the set \(A=[0,1)^{2}\) in the topology of \(\mathbb{R}^{2}\) has interior \(A^{\circ}=(0,1)^{2}\)).

Let \(d\ge1,\) \(D\subset\mathbb{R}^{d}\) and let \(f:D\to\mathbb{R}\) be a continuously differentiable function. If \(x\in D^{\circ}\) and \(x\) is a local extremum of \(f,\) then \(\nabla f(x)=0.\)

Proof. Let \(x\in D^{\circ}\) be a local extremum of \(f.\) Applying Taylor’s theorem (1.4) to \(f\) restricted to \(D^{\circ},\) we obtain that \[f(x+\Delta)\le f(x)+\nabla f(x)\cdot\Delta+|\Delta|r(\Delta)\] for \(\Delta\in\mathbb{R}^{d}\) small enough so that \(x+\Delta\in D^{\circ},\) where \(r(\Delta)\) is a function s.t. \(\lim_{\Delta\to0}r(\Delta)\to0.\) If \(\nabla f(x)\ne0,\) then with \(v=-\frac{\nabla f(x)}{|\nabla f(x)|}\) and \(\Delta=\varepsilon v\) it follows that \[f(x+\varepsilon v)\le f(x)-\varepsilon|\nabla f(x)|+\varepsilon\overline{r}(\varepsilon)=f(x)-\varepsilon(|\nabla f(x)|-\overline{r}(\varepsilon)),\] where \(\overline{r}(\varepsilon)=r(\varepsilon v)\to0\) as \(\varepsilon\downarrow0.\) There is a \(\delta>0\) s.t. \(\overline{r}(\varepsilon)<|\nabla f(x)|\) for \(\varepsilon\le\delta,\) which implies that the r.h.s. is strictly smaller than \(f(x).\) Thus \[f(x+\varepsilon v)<f(x)\quad\text{for all }\quad\varepsilon\in(0,\delta),\] proving that \(x\) is not a local minimizer. An analogous argument proves that \(x\) can not be a local maximizer. We have shown that if \(\nabla f(x)\ne0,\) then \(x\) is not a local extremum. This implies the claim of the lemma.

The converse is not true, since a point \(x\) s.t. \(\nabla f(x)=0\) may be a saddle point rather than a local extremum. Furthermore the condition that \(x\) lie in the interior of \(D\) is necessary, since if \(D\ne\mathbb{R}^{d},\) then there may exist local minima on the boundary \(\partial D\) that are not critical points, as in the last plot of Figure 1.2.

Next we consider the relationship between the second derivative(s) and properties of local extrema. We write \(A>B\) for \(d\times d\) matrices \(A,B\) if \(x^{T}(A-B)x>0\) for all \(x\in\mathbb{R}^{d}\setminus\{0\},\) and \(A\ge B\) if \(x^{T}(A-B)x\ge0\) for all \(x\in\mathbb{R}^{d}.\) In the expressions \(A>0\) and \(A\ge0\) the r.h.s.s. are interpreted as the matrix with all entries equal to zero.

Let \(d\ge1,\) \(D\subset\mathbb{R}^{d}\) and let \(f:D\to\mathbb{R}\) be a twice continuously differentiable function. Assume \(x\in D^{\circ}\) and \(\nabla f(x)=0.\)

a) If \(\nabla^{2}f(x)\) is positive definite (i.e \(\nabla^{2}f(x)>0\)), then \(x\) is a strict local minimum.

b) If \(\nabla^{2}f(x)\) is negative definite (i.e \(\nabla^{2}f(x)<0\)), then \(x\) is a strict local maximum.

c) If \(\nabla^{2}f(x)\) has a negative eigenvalue, then \(x\) is not a local minimum.

d) If \(\nabla^{2}f(x)\) has a positive eigenvalue, then \(x\) is not a local maximum.

e) If \(\nabla^{2}f(x)\) has both positive and negative eigenvalues, then \(x\) is a saddle point.

Proof. a) Assume that \(x\in D^{\circ},\nabla f(x)=0\) and \(\nabla^{2}f(x)>0.\) By Taylor’s theorem (1.5) there is a function \(r(\Delta)\) s.t. \(\lim_{\Delta\to0}r(\Delta)=0\) and \[f(x+\Delta)=f(x)+\Delta^{T}\frac{\nabla^{2}f(x)}{2}\Delta+|\Delta|^{2}r(\Delta)\:\forall\Delta\in\mathbb{R}^{d}\text{ s.t. }x+\Delta\in D^{\circ}.\tag{1.6}\] Since \(\nabla^{2}f(x)\) is positive definite, there is an \(a>0\) s.t. \[\Delta^{T}\nabla^{2}f(x)\Delta\ge a|\Delta|^{2}\quad\text{for all }\quad\Delta\in\mathbb{R}^{d}.\] Thus \[f(x+\Delta)\ge f(x)+\frac{a}{2}|\Delta|^{2}+|\Delta|^{2}r(\Delta)\ge f(x)+|\Delta|^{2}\left(\frac{a}{2}-|r(\Delta)|\right)\] for \(\Delta\) s.t. \(x+\Delta\in D^{\circ}.\) Since \(\lim_{\Delta\to0}r(\Delta)=0\) there a \(\delta>0\) s.t. \(|r(\Delta)|\le\frac{a}{4}\) whenever \(|\Delta|\le\delta.\) This implies that \[f(x+\Delta)\ge f(x)+|\Delta|^{2}\frac{a}{4}>f(x)\quad\text{for all }\quad\Delta\text{ s.t. }0<|\Delta|\le\delta.\] Thus we have proved that \(x\) is a strict local minimum. The proof of a) is complete.

The claim b) is proved analogously with reverse inequalities, or by applying a) to \(x\to-f(x).\)

c) If \(\nabla^{2}f(x)\) has a negative eigenvalue, then let \(v\) be a corresponding unit eigenvector. Using (1.6) for \(\Delta=\varepsilon v\) one can show that \(f(x+\varepsilon\Delta)<f(x)\) for all small enough \(\varepsilon>0,\) which means \(x\) can not be a local minimum.

d) This follows by applying the argument of c) with inequalities reversed, or applying c) to \(x\to-f(x).\)

e) This is a direct consequence of c,d).

If \(\nabla f(x)=0\) and \(\nabla^{2}f(x)=0\) then \(x\) can be a local minimum, a local maximum or neither, and these different possibilities can not be distinguished using only information about the first and second derivatives at \(x.\) Similarly, if \(\nabla f(x)=0\) and \(\nabla^{2}f(x)\ne0,\nabla^{2}f(x)\ge0\) (so that \(\nabla^{2}f(x)\) has both zero and a positive number as eigenvalues), then we can neither rule out that \(x\) is a local minimum, nor confirm that it is such a local minimum - but we can rule out that it is a local maximum.

0.1.3 Convexity and concavity

Convexity (or concavity) is very useful when optimizing. For instance, as well recall below, for convex function all local minimizer are guaranteed to be global minimizers. First recall the concept of a convex set.

Let \(V\) be a vector space over \(\mathbb{R}\) or \(\mathbb{C}.\) A subset \(A\subset V\) is convex if the line segment between any two points \(x,y\) in \(A\) is contained in \(A,\) i.e. if \[\theta x+(1-\theta)y\in A\quad\text{for all }\quad x,y\in A,\theta\in[0,1].\]

1.10. We will mainly only consider convex subsets of the vector spaces \(V=\mathbb{R}^{d},d\ge1.\)

Next we recall the concept of convexity and concavity of functions.

Let \(V\) be a vector space over \(\mathbb{R}\) or \(\mathbb{C},\) and let \(D\subset V\) be a convex set.

[Convex func.] A function \(f:D\to\mathbb{R}\) is convex if \[\theta f(x)+(1-\theta)f(y)\ge f(\theta x+(1-\theta)y)\:\ \text{for all}\:\ x,y\in D,\theta\in[0,1].\] The function is strictly convex if this inequality holds strictly for all \(x,y\in D,\theta\in(0,1).\)

[Concave func.] The function \(f\) is concave if \[\theta f(x)+(1-\theta)f(y)\le f(\theta x+(1-\theta)y)\:\ \text{for all}\:\ x,y\in D,\theta\in[0,1],\] (i.e. if \(-f\) is convex), and strictly concave if the inequality holds strictly for all \(x,y\in D,\theta\in(0,1)\) (i.e. if \(-f\) is strictly convex).

1.12. Again, we will only consider convex/concave functions when \(D\subset V=\mathbb{R}^{d},d\ge1.\)

Now we turn to the aforementioned property of the extrema of convex resp. concave functions.

Let \(d\ge1,\) let \(D\subset\mathbb{R}^{d}\) be a convex set and \(f:D\to\mathbb{R}\) a function. Let \(x\in D.\)

a) If \(f\) is convex, then \(x\) is local minimizer iff it is a global minimizer.

b) If \(f\) is strictly convex , then \(f\) has at most one local and global minimizer.

c) If \(f\) is concave, then \(x\) is local maximum iff it is a global maximizer.

d) If \(f\) is strictly concave, then \(f\) has at most one local and global maximizer.

Proof. a) Assume \(f\) is convex, and assume for contradiction that \(x\in D\) is a local minimizer which is not a global minimizer. Then there is a \(y\in D\) s.t. \(f(y)<f(x).\) By convexity \[f(\varepsilon x+(1-\varepsilon)y)\le\varepsilon f(x)+(1-\varepsilon)f(y)\quad\forall\varepsilon\in[0,1].\] Since \(f(y)<f(x)\) we have \[\varepsilon f(x)+(1-\varepsilon)f(y)<f(x)\quad\forall\varepsilon\in(0,1].\] Thus \[f(\varepsilon x+(1-\varepsilon)y)<f(x)\quad\forall\varepsilon\in(0,1],\] which contradicts \(x\) being a local minimizer.

b) Assume \(f\) is strictly convex, and assume for contradiction that \(x,y\in D\) are distinct global minimizers. Then \(\min_{z\in D}f(z)=f(x)=f(y).\) By strict convexity \[f(\varepsilon x+(1-\varepsilon)y)<\varepsilon f(x)+(1-\varepsilon)f(y)\forall\varepsilon\in(0,1).\] The r.h.s. is just \(\min_{z\in D}f(z),\) so for instance for setting \(\varepsilon=\frac{1}{2}\) we obtain that \[f\left(\frac{x+y}{2}\right)<\min_{z\in D}f(z),\] where \(\frac{x+y}{2}\in D.\) This contradicts the definition of global minimum value. Thus there are no distinct \(x,y\in D\) that are both global minimizers, so \(f\) has at most one global minimizer.

c,d) This follows by reversing the inequalities in the argument for a,b) above, or applying a,b) to \(x\to-f(x).\)

A strictly convex function can can have zero or one global minimizer, while a non-strictly convex function can have zero, one, several or infinitely many global minimizers. The set of global minimizers must be convex. It can not have any local minimizers that are not global minimizers. For concave functions the same applies to maximizers. The next figure shows some examples.

Next we recall that the sum and maximum of convex functions is again a convex function, and the sum and minimum of concave function is again a concave function.

\(\,\)

a) If \(f_{1},f_{2}\) are convex functions with the same domain then \(f_{1}+f_{2}\) and \(\max(f_{1},f_{2})\) are convex functions. More generally, for a set \(I\) of any cardinality (including uncountable) and a family \(f_{i},i\in I,\) of convex functions indexed by \(i\in I,\) all with the same domain \(D,\) the function \[x\to\sup_{i\in I}f_{i}(x)\] with domain \(D_{I}=\{x\in D:\sup_{i\in I}f_{i}(x)<\infty\}\) is a convex function.

b) If \(f_{1},f_{2}\) are concave functions with the same domain then \(f_{1}+f_{2}\) and \(\min(f_{1},f_{2})\) are concave functions. For a set \(I\) of any cardinality and a family \(f_{i},i\in I,\) of concave functions indexed by \(i\in I\) all with the same domain \(D,\) then the function \[x\to\inf_{i\in I}f_{i}(x)\] with domain \(D_{I}=\{x\in D:\inf_{i\in I}f_{i}(x)>-\infty\}\) is a concave function.

Proof. a) If \(f_{1},f_{2}:D\to\mathbb{R}\) are convex then \(g=f_{1}+f_{2}\) satisfies \[\begin{array}{ccl} g(\theta x+(1-\theta)y) & = & f_{1}(\theta x+(1-\theta)y)+f_{2}(\theta x+(1-\theta)y)\\ & \le & \theta f_{1}(x)+(1-\theta)f_{2}(y)+\theta f_{2}(x)+(1-\theta)f_{2}(y)\\ & = & \theta g(x)+(1-\theta)g(y), \end{array}\] for any \(x,y\in D,\theta\in[0,1].\) Thus \(g\) is convex.

Define \(h:D_{I}\to\mathbb{R}\) by \[h(x)=\sup_{i\in I}f_{i}(x).\] It satisfies \[\begin{array}{ccl} h(\theta x+(1-\theta)y) & = & \sup_{i\in I}f_{i}(x\theta+(1-\theta)y)\\ & \le & \sup_{i\in I}(\theta f_{i}(x)+(1-\theta)f_{i}(y))\\ & \le & \theta\sup_{i\in I}f_{i}(x)+(1-\theta)\sup_{i\in I}f_{i}(y)\\ & = & \theta h(x)+(1-\theta)h(y), \end{array}\] for any \(x,y\in D,\theta\in[0,1].\) Thus \(h\) is convex.

The claim b) follows by changing the direction of the inequalities in the arguments above, or by applying \(a)\) to \(x\to-f_{i}(x).\)

Next we recall that for sufficiently differentiable functions, convexity is equivalent to having everywhere non-negative second derivative in the univariate case, and more generally everywhere positive semidefinite Hessian. Concavity is equivalent to non-positive second derivative or more generally to everywhere negative semi-definite Hessian.

Let \(d\ge1\) and let \(D\subset\mathbb{R}^{d}\) be open and convex. Assume \(f:D\to\mathbb{R}\) is twice continuously differentiable.

a) The function \(f\) is convex iff \(\nabla^{2}f(x)\ge0\) for all \(x\in D.\)

b) If \(\nabla^{2}f(x)>0\) for all \(x\in D,\) then \(f\) is strictly convex.

c) The function \(f\) is concave iff \(\nabla^{2}f(x)\le0\) for all \(x\in D.\)

d) If \(\nabla^{2}f(x)<0\) for all \(x\in D,\) then \(f\) is strictly concave.

1.16. Note that a,c) are “iffs”, but not b,d). Indeed for instance \(f(x)=x^{4}\) is strictly convex, but \(f''(0)=0,\) so the converse of b) (and d)) is not true. Optional exercise: prove that \(f:\mathbb{R}\to\mathbb{R}\) is strictly convex iff \(f''(x)\ge0\) for all \(x\in\mathbb{R}\) and \(f''(x)=0\) for at most one \(x\in\mathbb{R}.\)

Proof. \(a)\implies\) Assume \(f\) is convex. For any \(x\in D,\) Taylor’s theorem (1.5) states that \[f(x+\Delta)=f(x)+(\nabla f(x))\cdot\Delta+\frac{\Delta^{T}\nabla^{2}f(x)\Delta}{2}+|\Delta|^{2}r(\Delta)\tag{1.10}\] for all \(\Delta\) s.t. \(x+\Delta\in D,\) where \(r\) is a function such that \(\lim_{\Delta\to0}r(\Delta)=0.\) By the convexity of \(f\) \[\frac{f(x+\Delta)+f(x-\Delta)}{2}\ge f(x)\] for all \(\Delta.\) Combining with (1.10) we get that \[f(x)+\Delta^{T}\frac{\nabla^{2}f(x)}{2}\Delta+|\Delta|^{2}\frac{r(\Delta)+r(-\Delta)}{2}\ge f(x)\] or equivalently \[\frac{\Delta^{T}\nabla^{2}f(x)\Delta}{|\Delta|^{2}}\ge-(r(\Delta)+r(-\Delta)).\] For any unit vector \(v\) we can set \(\Delta=\varepsilon v\) and take the limit \(\varepsilon\downarrow0\) to obtain \[v^{T}\nabla^{2}f(x)v\ge0.\] Since this holds for all unit vectors \(v,\) it follows that \(\nabla^{2}f(x)\ge0.\)

\(a)\impliedby\) Assume \(\nabla^{2}f(x)\) is everywhere positive semidefinite. Let \(x,y\in D\) and consider \[g(\theta)=f(\theta x+(1-\theta)y)-(\theta f(x)+(1-\theta)f(y)),\theta\in[0,1].\] Firstly \[g(0)=g(1)=0.\tag{1.11}\] Furthermore \[\begin{array}{ccl} \frac{d}{d\theta}f(\theta x+(1-\theta)y) & = & \sum_{i=1}^{d}\partial_{i}f(\theta x+(1-\theta)y))\cdot\frac{d}{d\theta}\left\{ \theta x_{i}+(1-\theta)y_{i}\right\} \\ & = & \sum_{i=1}^{d}\partial_{i}f(\theta x+(1-\theta)y))(x_{i}-y_{i})\\ & = & (\{\nabla f\}(\theta x+(1-\theta)y))\cdot(x-y). \end{array}\] Taking another derivative we obtain \[\begin{array}{ccl} \frac{d^{2}}{d\theta^{2}}f(\theta x+(1-\theta)y) & = & \frac{d}{d\theta}\sum_{i=1}^{d}\partial_{i}f(\theta x+(1-\theta)y))(x_{i}-y_{i})\\ & = & \sum_{j=1}^{d}\sum_{i=1}^{d}\partial_{ij}f(\theta x+(1-\theta)y)(x_{i}-y_{i})\frac{d}{d\theta}(\theta x+(1-\theta)y)\\ & = & \sum_{j=1}^{d}\sum_{i=1}^{d}\partial_{ij}f(\theta x+(1-\theta)y)(x_{i}-y_{i})(x_{j}-y_{j})\\ & = & (x-y)^{T}\{\nabla^{2}f\}(\theta x+(1-\theta)y)(x-y). \end{array}\] Thus \[g''(\theta)=(x-y)^{T}(\{\nabla f\}(\theta x+(1-\theta)y)(x-y),\] so \(g''(\theta)\ge0\) for all \(\theta\in[0,1].\) Thus \(g'\) is non-decreasing. By the mean value theorem there is a \(\theta_{*}\in[0,1]\) such that \(g'(\theta_{*})=0.\) Since \(g'\) is non-decreasing this means that \(g'(\theta)\le0\) for \(\theta\in[0,\theta_{*}]\) and \(g'(\theta)\ge0\) for \(\theta\in[0,\theta_{*}].\) This and (1.11) implies that \(g(\theta)\ge0\) for all \(\theta\in[0,1],\) proving that \[f(\theta x+(1-\theta)y)\ge\theta f(x)+(1-\theta)f(y),\theta\in[0,1].\] Since this holds for any \(x,y\in D,\) the function \(f\) is convex.

b) follows by reversing the inequalities in the argument above, or applying a) to \(x\to-f(x).\)

0.1.4 Simple optimization problems

Let us consider the general class of optimization problems \[\text{minimize }f,\text{ where }f:D\to\mathbb{R},D\subset\mathbb{R}^{d},d\ge1.\] Since maximizing \(f(x)\) is the same as minimizing \(-f(x),\) this form covers both minimization and maximization. We call the \(f\) to be optimized the objective function.

Consider the optimization problem \[\text{minimize }\frac{x^{4}}{4}-\frac{x^{2}}{2}\text{\,for }x\in\mathbb{R}.\tag{1.12}\] This problem has two global minimizers at \(x=\pm1,\) and the minimum is \(f(\pm1)=-\frac{1}{2}.\)

Solution:

The objective function \(f(x)=\frac{x^{4}}{4}-\frac{x^{2}}{2}\) is infinitely differentiable with derivative \(f'(x)=x^{3}-x,\) so the critical point equation is \[x^{3}-x=0\iff x(x^{2}-1)=0\iff x\in\{0,-1,1\}.\] Thus \(f\) has the three critical points \(x\in\{0,-1,1\},\) and any local or global minimizers must belong to this set. Since \(f''(x)=3x^{2}-1\) is positive for \(x=\pm1\) and negative for \(x=0,\) we conclude that \(x=\pm1\) are local minimizers while \(x=0\) is a local maximizer. By symmetry \(x=\pm1\) yield the same value of the objective function, so they are global minimizers as long as one can not achieve a lower value of \(f\) by taking \(x\to\infty\) or \(x\to-\infty.\) Since the term \(x^{4}\) dominates as \(x\to\pm\infty\) we in fact have \(\lim_{x\to\pm\infty}f(x)=\infty.\) Thus \(x=\pm1\) are indeed global minimizers and the global minimum value of (1.12) is \[f(\pm1)=\frac{1}{4}-\frac{1}{2}=-\frac{1}{2}.\] Alternatively, instead of computing the second derivative one can simply compute \(f(x)\) at all critical points and compare the values. For this problem one gets \(f(0)=0\) and \(f(\pm1)=-\frac{1}{2},\) which allows one to rule out \(x=0\) as a global minimizer.

Consider the optimization problem \[\text{maximize }e^{-\frac{x^{2}}{2}}\left(\frac{x}{4}-x^{2}-1\right)\text{ for }x\in\mathbb{R}.\tag{1.13}\] This problem has three critical point, the largest one being a local maximum at \(x=\frac{1}{4}.\) But the global maximum is achieved for \(x\to\pm-\infty.\)

Solution: We note that the objective function \[f(x)=e^{-\frac{x^{2}}{2}}\left(\frac{x}{4}-x^{2}-1\right)\] is infinitely differentiable, so any local or global maximizer must be a critical point \(x\) with \(f''(x)\le0.\) We compute \[f'(x)=e^{-\frac{x^{2}}{2}}\left(\frac{1}{4}-2x-\frac{x^{2}}{4}+x^{3}+x\right)=e^{-\frac{x^{2}}{2}}\left(x^{3}-\frac{1}{4}x^{2}-x+\frac{1}{4}\right).\] Thus the critical point equation \(f'(x)=0\) is equivalent to \[x^{3}-\frac{1}{4}x^{2}-x+\frac{1}{4}=0.\] By inspection we notice that \(x=1\) is a root of this polynomial, which can then by factored as \[(x-1)\left(x^{2}+\frac{3}{4}x-\frac{1}{4}\right)=0\] The quadratic \(x^{2}+\frac{3}{4}x-\frac{1}{4}\) has solutions \(x=-1\) and \(x=\frac{1}{4}.\) Thus all critical points of \(f\) are \[x=-1,\quad x=\frac{1}{4},\quad x=1.\] Simply evaluating \(f\) at these points we get the objective function values \[f(-1)=-\frac{9}{4}e^{-\frac{1}{2}},\quad f\left(\frac{1}{4}\right)=-e^{-\frac{1}{32}},\quad f(1)=-\frac{7}{4}e^{-\frac{1}{2}}.\] Note that clearly \(f(1)>f(-1).\) Also \(-e^{-\frac{1}{32}}\approx-0.97\) and \(-\frac{7}{4}e^{-\frac{1}{2}}\approx-1.06,\) so \(f(\frac{1}{4})>f(1).\) Thus \(x=\frac{1}{4}\) is the critical point with the largest value. But \(\lim_{x\to\pm}f(x)=0>f\left(\frac{1}{4}\right),\) so in fact the value of (1.13) of \(f\) is \(0,\) and there is no global maximizer.

Consider the optimization problem \[\text{minimize }\frac{1}{4}x^{4}-\frac{1}{2}x^{2}+\frac{1}{4}y^{4}-y^{2}-\frac{x^{2}y^{2}}{8}\text{ for }x,y\in\mathbb{R}.\tag{1.14}\] This problem has several local minima, of which \(\left(\frac{2\sqrt{2}}{\sqrt{5}},\frac{2\sqrt{3}}{\sqrt{5}}\right)\) is the global minimizer.

Solution:

The objective function \[f(x,y)=\frac{1}{4}x^{4}-\frac{1}{2}x^{2}+\frac{1}{4}y^{4}-y^{2}-\frac{x^{2}y^{2}}{8}\] is infinitely differentiable and has gradient \[\nabla f(x,y)=\left(\begin{matrix}x^{3}-x-\frac{xy^{2}}{4}\\ y^{3}-2y-\frac{x^{2}y}{4} \end{matrix}\right).\] The critical point equation is thus the system \[\begin{cases} x^{3}-x-\frac{xy^{2}}{4} & =0,\\ y^{3}-2y-\frac{x^{2}y}{4} & =0. \end{cases}\] Factoring out \(x\) and \(y\) these turn into \[\begin{cases} x(x^{2}-1-\frac{y^{2}}{4}) & =0,\\ y(y^{2}-2-\frac{x^{2}}{4}) & =0. \end{cases}\] Thus \(x=y=0\) is a solution. A solution with \(x\ne0\) and \(y=0\) must satisfy \(x^{2}-1=0,\) so \((x,y)=(\pm1,0)\) are solutions. Similarly a solution with \(x=0\) and \(y\ne0\) must satisfy \(y^{2}-2=0,\) so \((x,y)=(0,\pm\sqrt{2})\) are solutions. Lastly a solution with \(x\ne0,y\ne0\) must satisfy \[\begin{cases} x^{2}-1-\frac{y^{2}}{4} & =0,\\ y^{2}-2-\frac{x^{2}}{4} & =0. \end{cases}\] This is a linear system in \(x^{2},y^{2}\) with unique solution \[x^{2}=\frac{8}{5},\quad\quad y^{2}=\frac{12}{5}.\] Thus \(\left(\pm2\sqrt{\frac{2}{5}},\pm2\sqrt{\frac{3}{5}}\right)\) are also solutions. We have found a total of \(1+2+2+4=9\) critical points. Taking into account symmetries of \(f\) this reduces to the four solutions \((0,0),(1,0),(0,\sqrt{2}),\left(2\sqrt{\frac{2}{5}},2\sqrt{\frac{3}{5}}\right).\) We attempt to classify them as local minimizers, maximizers or saddle points by considering the Hessian \[\nabla^{2}f(x,y)=\left(\begin{matrix}3x^{2}-1-\frac{y^{2}}{4} & -\frac{xy}{2}\\ -\frac{xy}{4} & 3y^{2}-2-\frac{x^{2}}{4} \end{matrix}\right).\] At \((x,y)=(0,0)\) we obtain \[\nabla^{2}f(0,0)=\left(\begin{matrix}-1 & 0\\ 0 & -2 \end{matrix}\right)\] which is negative definite, so \((0,0)\) is a local maximum. At \((x,y)=(1,0)\) we obtain \[\nabla^{2}f(1,0)=\left(\begin{matrix}2 & 0\\ 0 & -2 \end{matrix}\right)\] with positive and negative eigenvalues, so \((1,0)\) is a saddle point. At \((x,y)=(0,\sqrt{2})\) we obtain the negative definite \[\nabla^{2}f(0,\sqrt{2})=\left(\begin{matrix}-\frac{3}{2} & 0\\ 0 & -\frac{1}{2} \end{matrix}\right),\] so \((0,\sqrt{2})\) is a local maximum. Lastly at \((x,y)=\left(2\sqrt{\frac{2}{5}},2\sqrt{\frac{3}{5}}\right)\) we obtain \[\nabla^{2}f(x,y)=\frac{2}{15}\left(\begin{matrix}8 & -\sqrt{6}\\ -\sqrt{6} & 12 \end{matrix}\right).\tag{1.15}\] The sign of the eigenvalues of (1.15) can either by obtained by explicitly computing them, or by computing \[{\rm Tr}\:\left(\begin{matrix}8 & -\sqrt{6}\\ -\sqrt{6} & 12 \end{matrix}\right)>0\quad\text{and}\quad\det\left(\begin{matrix}8 & -\sqrt{6}\\ -\sqrt{6} & 12 \end{matrix}\right)=8\times12-6>0,\] and noting that a \(2\times2\) matrix with positive trace and determinant has two positive eigenvalues. This shows that \((x,y)=\left(2\sqrt{\frac{2}{5}},2\sqrt{\frac{3}{5}}\right)\) is a local minimum. Thus our candidate for the value of (1.14) is \[f(x,y)=\left(2\sqrt{\frac{2}{5}},2\sqrt{\frac{3}{5}}\right)=-\frac{8}{5}.\] Before we can conclude that this is the minimum value, we must verify that one cannot achieve a lower value as the limit of a sequence \((x_{n},y_{n})\) diverging to infinity. We can do so by noting that the quartic terms in \(f(x,y)\) dominate as \(x,y\to\infty,\) and they satisfy \[\frac{1}{4}x^{4}+\frac{1}{2}y^{4}-\frac{x^{2}y^{2}}{8}\ge\frac{1}{4}\max(|x|,|y||)^{4}-\frac{\max(|x|,|y|)^{4}}{8}=\frac{\max(|x|,|y|)^{4}}{8}.\] Thus \(f(x,y)\to\infty\) as \((x,y)\to\infty,\) so the local minimizer \(\left(\frac{2\sqrt{2}}{\sqrt{5}},\frac{2\sqrt{3}}{\sqrt{5}}\right)\) is indeed a global minimizer, (1.14) is \(-\frac{8}{5}.\)

Consider the optimization problem \[\text{minimize }x^{3}-x^{2}+x^{2}y^{2}\quad\text{for}\quad x,y\in[-3,3].\tag{1.16}\] For this problem the optimum is achieved at the boundary, and is not a critical point.

Solution: We note that the objective function \[f(x,y)=x^{3}-x^{2}+x^{2}y^{2}\] has domain bounded \[D=[-3,3]^{2},\] and is infinitely differentiable, so any local or global minimizer in \((-3,3)^{2}\) (the interior of \(D\)) must be a critical point \(x\) with \(\nabla^{2}f(x)\ge0.\) We compute \[\nabla f(x,y)=\left(\begin{matrix}3x^{2}-2x+2xy^{2}\\ 2x^{2}y \end{matrix}\right).\] Thus the critical point equation \[\nabla f(x,y)=0\] is equivalent to the system \[\begin{cases} 3x^{2}-2x+2xy^{2} & =0,\\ 2x^{2}y & =0. \end{cases}\] It is easy to see that \((0,y)\) is a solution for any \(y,\) and any solution \((x,y)\) with \(x\ne0\) must satisfy \(y=0\) and \[3x-2=0\iff x=\frac{2}{3}.\] Evaluating \(f\) at the solutions we obtain \[f(0,y)=0\ \forall y\quad\text{and}\quad f\left(\frac{2}{3},0\right)=-\frac{4}{27}.\] Thus \((x,y)=\left(\frac{2}{3},0\right)\) is the critical point with the lowest objective function value. But before concluding that this is the solution of (1.16), we must consider \(f\) on the boundaries of \(D,\) that is for \(x=\pm3,y\in[-3,3]\) and \(x=[-3,3],y=\pm3.\) Evaluating \(f\) on these sets we obtain \[\begin{array}{lcc} f(3,y)=18+9y^{2}\ge0, & & f(-3,y)=-36+9y^{2},\\ f(x,3)=f(x,-3)=8x^{2}+x^{3}. \end{array}\] We see that on the boundary with \(x=3\) no value is achieved which “beats” \(f\left(\frac{2}{3},0\right)=-\frac{4}{27},\) but on the boundary with \(x=-3\) the point \((x,y)=(-3,0)\) achieves the strictly lower value \(f(-3,0)=-36.\) Finally to find the lowest point on the boundaries \(y=\pm3\) we must solve the optimization problem \[\text{Minimize }8x^{2}+x^{3}\text{ for }x\in[-3,3].\tag{1.17}\] We solve this by noting that the critical point equation is \[16x+3x^{2}=0\] with solutions \[x_{1}=0,\quad x_{2}=-\frac{16}{3},\] corresponding to the objective function values \[x_{1}^{2}+x_{1}^{3}=0,\quad8x_{2}^{2}+3x_{2}^{3}=x_{2}^{2}(8+3x_{2})=x_{2}^{2}\frac{56}{9}\ge0.\] Thus these do not “beat” the point \(f(-3,0)=-36.\) Before we conclude that this is the solution, we must check the boundaries of the “subproblem” (1.17), noting that for \(x_{\pm}=\pm3\) we have \[-9=x_{-}^{2}(8+3x_{-})=8x_{-}^{2}+x_{-}^{3}<8x_{+}^{2}+x_{+}^{3}.\] Thus these points do not “beat” the point \(f(-3,0)=-36.\) We can conclude that the solution to (1.16) is \(-36,\) achieved uniquely at \((x,y)=(-3,0).\)

Consider the optimization problem \[\text{minimize }\:\frac{1}{2}p\log p+\frac{1}{2}(1-p)\log(1-p)+p\text{ for }p\in[0,1].\] One can show that the objective function of this problem is convex, and one can also find a critical point. By convexity this critical point is automatically the global minimizer.

Solution: The solution can be simplified by noting that the objective function \[f:[0,1]\to\mathbb{R},\quad\quad f(p)=\frac{1}{2}p\log p+\frac{1}{2}(1-p)\log(1-p)+p\] is convex. Indeed, it is infinitely differentiable on \((0,1)\) with derivative \[f'(p)=\frac{1}{2}\log p-\frac{1}{2}\log(1-p)+\frac{1}{2}-\frac{1}{2}+1\] and second derivative \[f''(p)=\frac{1}{2p}+\frac{1}{2(1-p)}>0\text{ for all }p\in(0,1).\] Thus actually \(f\) is even strictly convex on \([0,1].\) This means that if a critical point of \(f\) exists, it is the global minimizer - we do not have to worry about the boundaries of \([0,1].\) We thus proceed to solve the critical point equation \[\begin{aligned}f'(p)=0\iff\frac{1}{2}\log p-\frac{1}{2}\log(1-p)+1=0\iff & \frac{p}{1-p}=e^{-2}\\ \iff & p=\frac{1}{1+e^{2}}. \end{aligned}\] Since we have successfully found a critical point of this convex function, we can already conclude that this is the global minimizer, giving global minimum value \[\begin{aligned}f\left(\frac{1}{1+e^{2}}\right) & =-\frac{1}{2}\frac{1}{1+e^{2}}\log(1+e^{2})-\frac{1}{2}\frac{e^{2}}{1+e^{2}}\log\left(\frac{1+e^{2}}{e^{2}}\right)+\frac{1}{1+e^{2}}\\ & =\frac{e^{2}}{1+e^{2}}+\frac{1}{1+e^{2}}-\frac{1}{2}\log(1+e^{2})\\ & =1-\frac{1}{2}\log(1+e^{2}). \end{aligned}\]

To summarize, the take home message about this basic kind of optimization problem is: \[\text{Solve the critical point equation!}\] All the while: \[\begin{array}{c} \text{Remember to check for things that don't}\\ \text{show up as solutions to the critical point equation!}\\ \implies\text{extrema at boundaries}\\ \implies\text{infima/suprema achieved only as limits, rather than by global extrema.} \end{array}\]

0.2 Constrained optimization using Lagrange multipliers

The problem \[\text{minimize }\,\:(x-y)^{2}-x-y\,\:\text{ subject to }\,\:\left(x-\frac{1}{4}\right)^{2}+2y^{2}=\frac{1}{2}\tag{2.1}\] is a constrained optimization problem, because to solve it one must optimize the objective function \(f(x)\) not over all \(x,\) but only over \(x\) that satisfy the constraint \(\left(x-\frac{1}{4}\right)^{2}+2y^{2}=\frac{1}{2}.\) Such problems are common in many applications. For instance, imagine we plan to build a shed with dimensions \(x\times y\times z\) meters, and the cost of the materials is \(R\) CHF/\(m^{2}\) for the roof, \(W\) CHF/\(m^{2}\) for the walls and \(F\) CHF/\(m^{2}\) for the floor. Then for given dimensions \(x,y,z\) the total cost is \((F+R)xy+W(2xz+2yz).\) The largest volume shed we can build with a budgetary constraint of \(4000\) CHF is the solution of constrained optimization problem \[\text{maximize }\,xyz\:\text{ subject to }\begin{cases} (F+R)xy+W(2xz+2yz)\le4000,\\ x\ge0,y\ge0,z\ge0. \end{cases}\]

A general form of a constrained optimization problem is given by the following definition.

For \(d\ge1,\) domain \(D\subset\mathbb{R}^{d},\) objective function \(f:D\to\mathbb{R}\) and constraint function \({h:D\to\mathbb{R}}\) we call \[\text{minimize /maximize}\,\:f(x),x\in D\,\:\text{ subject to }\,\:h(x)=0,\tag{2.3}\] an optimization/minimization/maximization problem with one equality constraint.

Since maximizing subject to the constraint \(h(x)=0\) is equivalent to minimizing \(-f(x)\) subject to the constraint we only need to consider the minimization form of (2.3).

Solving the critical point equation \[\nabla f(x)=0\tag{2.4}\] of the objective function is not helpful in finding optima of the constrained optimization problem (2.3), since a critical point of \(f\) will in general not satisfy the constraint. There is however a different but related equation that can be solved to find optima for constrained problems.

0.2.1 Lagrange multipliers

Assume \(d\ge k\ge1,\) \(D\subset\mathbb{R}^{d},\) \(f:D\to\mathbb{R}\) and \(h:D\to\mathbb{R}^{k}.\) Let \(I_{h}=\{x\in D:h(x)=0\}\) and let \(f|_{I_{h}}:I_{h}\to\mathbb{R}\) denote the restriction of \(f\) to \(I_{h}.\) A local optimum/minimum/maximum of \(f|_{I_{h}}\) is called a constrained extremum/minimum/maximum of the constrained optimization problem (2.3).

Note that being a local extremum of \(f|_{I_{h}}\) is a weaker condition than being a local extremum of \(f.\)

(Lagrange multiplier condition - \(d\) equality constraints). If \(d\ge1,\) \(D\subset\mathbb{R}^{d},\) \(f,g:D\to\mathbb{R}\) are differentiable and \(\lambda\in\mathbb{R},\) then we call \[\begin{aligned} \nabla f(x) & =\lambda\nabla h(x),\tag{}\\ h(x) & =0,\tag{} \end{aligned}\] the Lagrange multiplier conditions of the constrained optimization problem (2.3).

Subject to appropriate assumptions, any constrained local optimum of (2.3) must satisfy the Lagrange multiplier conditions ([eq:lagrange_conditions_eq1])-([eq:lagrange_conditions_eq2]) (see Theorem 2.20 below for a rigorous statement). The condition ([eq:lagrange_conditions_eq1]) has the following geometric formulation: \[``\nabla f(x)\text{ is perpendicular to the tangent plane of the surface}I_{h}\text{ at }x''.\tag{2.5}\] See Figures 2.1-2.2 for an illustration. To see why a constrained local optimum will (usually) satisfy (2.5), note that:

If \(h(x)\) is sufficiently smooth, then the condition \(h(x)=0\) defines a smooth \(d-1\)-dimensional surface embedded in \(\mathbb{R}^{d}\) (i.e. a manifold). The tangent (hyper)plane \(x+T\) at a point \(x\) is the plane passing through \(x\) which is perpendicular to \(\nabla h(x).\)

For any direction \(v\in\mathbb{R}^{d}\) s.t. \(\nabla f(x)\cdot v<0,\) one can move a sufficiently small distance in the direction of \(v\) to arrive at a point \(x'=x+\varepsilon v\) with a strictly smaller value of the objective \(f(x')<f(x).\)

If \(x\) is a point in \(I_{h}\) and \(v\) is the projection of \(-\nabla f(x)\) onto \(T\) and \(v\ne0,\) then we can move inside the constraint set \(I_{h}\) on a trajectory that is approximately a straight line in the direction \(v.\) Therefore by \(2.\) we can reduce the value of the objective function \(f(x)\) while maintaining the constraint. Such a point \(x\) can not be a constrained local minimum.

Thus, generally speaking, a constrained local minimum \(x\) of the minimization problem (2.3) must have a projection of \(-\nabla f(x)\) onto \(T\) which vanishes, which is equivalent to (2.5), and to \(\nabla f(x)=\lambda\nabla h(x)\) holding for some \(\lambda\in\mathbb{R}.\)

0.2.2 Detailed heuristic discussion

For the optimization problem with the constraint \({h(x)=0},\) the equivalent of the critical point equation \(\nabla f(x)=0\) from unconstrained setting is the Lagrange multiplier condition \[\nabla f(x)=\lambda\nabla h(x).\tag{2.6}\] Below you will see how the method of Lagrange multipliers uses this equation in practice. But first, let us heuristically derive the equation (2.6) using geometrical intuition.

For any constraint \(h(x)=0,\) let \(I_{h}\) denote the subset of \(\mathbb{R}^{d}\) of points that satisfy the constraint, i.e. \(I_{h}=\{x\in\mathbb{R}^{d}:h(x)=0\}.\) Consider \(f|_{I_{h}},\) the objective function \(f\) restricted to this smaller domain. We will argue that if \(x\in I_{h}\) is a local minimum of \(f|_{I_{h}},\) then it satisfies (2.6) for some \(\lambda\) (at least usually). Cf. Lemma 1.7, which proves that if \(x\in\mathbb{R}^{d}\) is a local minimum of \(f\) (without constraint/restriction), then it satisfies (2.4). Note that being a local minimum of \(f|_{I_{h}}\) is a weaker condition than being a local minimum of \(f,\) since the latter condition requires the existence of an \(\varepsilon>0\) s.t. \(f(x)\le f(y)\) whenever \(y\in\mathbb{R}^{d}\) and \(|x-y|\le\varepsilon,\) while the former condition requires \(f(x)\le f(y)\) only whenever \(y\in I_{h}\) and \(|x-y|\le\varepsilon.\)

Gradients and descent directions

For a differentiable function \({f:\mathbb{R}^{d}\to\mathbb{R}},\) the gradient \(\nabla f(x)\) at a point \(x\) is the direction of fastest ascent (increase) of \(f.\) That is, if one were to move slightly away from \(x\) in some direction, the value of \(f\) will increase the fastest if that direction is \(\nabla f(x),\) provided \(\nabla f(x)\ne0.\) The negative gradient \(-\nabla f(x)\) is the direction of fastest descent (decrease), provided \(\nabla f(x)\ne0.\)

If \(\nabla f(x)\ne0\) for a given \(x,\) then moving from \(x\) to a sufficiently close point \(x'\) in the direction of \(-\nabla f(x)\) is guaranteed to yield a strictly lower value of \(f.\) This is in fact true for any direction \(v\) such that the directional derivative \(\partial_{v}f(x)\) is negative. Recall that the directional derivative is given by \[\partial_{v}f(x)=\nabla f(x)\cdot v,\] (for any unit vector \(v,\) i.e. \(v\in\mathbb{R}^{d}\) s.t. \(|v|=1\)). Thus moving a small enough distance in any direction \(v\) with a negative inner product with \(\nabla f(x)\) is guaranteed to yield a point with a strictly smaller value of \(f.\) Equivalently, this holds if the direction \(v\) makes an angle of less than \(90\) degrees with the fastest descent direction \(-\nabla f(x).\) Similarly, moving in a direction with a positive inner product with \(\nabla f(x)\) (or equivalently with an angle of less than \(90\) degrees with the fastest ascent direction \(\nabla f(x)\)), is guaranteed to yield a point with a strictly larger value of \(f.\)

Only when moving in a direction \(v\) such that \(\nabla f(x)\cdot v=0\) - i.e. such that \(v\) is perpendicular to the fastest descent/ascent directions - are we unable to guarantee either decrease or increase (using only information on \(\nabla f(x)\)). See Figure 2.3 for an illustration when \(d=2\) and objective function \(f:\mathbb{R}^{2}\to\mathbb{R}\) given by \[f(x_{1},x_{2})=(x_{1}-x_{2})^{2}-x_{1}-x_{2}.\]

Tangent spaces

If \(h(x)=0\) is the implicit definition of a sufficiently smooth surface embedded in \(\mathbb{R}^{d},\) then at each point \(x\) on the surface it has a tangent hyperplane: an affine \(\mathbb{R}^{d-1}\)-dimensional subspace that touches the surface at \(x,\) and is the best linear approximation of the surface in any small enough neighborhood of \(x.\)

Starting at a point \(x\in I_{h}\) and moving a small distance while staying in \(I_{h}\) entails moving in a direction which lies in the tangent plane (to be more precise, for a sufficiently regular path \(x(t)\) starting at \(x(0)=x\) and staying in \(I_{h},\) the “initial direction” \(x'(0)\in\mathbb{R}^{d}\) must lie in the tangent plane at \(x\)).

The gradient \(\nabla h(x)\) is a normal vector to the tangent plane at \(x,\) i.e. a vector that is perpendicular to the tangent plane. That is, the tangent plane consists of all \(y\in\mathbb{R}^{d}\) such that \((y-x)\cdot\nabla h(x)=0.\) Figure 2.3 illustrates this for \(d=2\) and constraint function \(h:\mathbb{R}^{2}\to\mathbb{R}\) given by \[h(x_{1},x_{2})=\frac{1}{(x_{1}+1)^{2}+x_{2}^{2}+1}+\frac{1}{(x_{1}-1)^{2}+x_{2}^{2}+1}-\frac{9}{10}.\] Since \(d=2,\) the constraint \(h(x_{1},x_{2})=0\) defines a curve in the plane, and the tangent planes are lines.

Heuristic derivation of condition which constrained local minima must satisfy

Let us superimpose Figure 2.7, showing the curve defined by the constraint \(h(x)=0,\) onto Figure 2.3, showing the (fastest descent) directions of the objective function \(f(x).\) The result is Figure 2.1.

Fixing a starting point \(x\in I_{h}\) on the constraint surface and moving a small distance irrespective of the constraint, the fastest descent is achieved if moving in the direction \(-\nabla f(x),\) and a decrease by a non-zero amount is guaranteed if moving in an direction \(v\) with a negative inner product with \(\nabla f(x).\) This argument makes sense for \(d=2\) as in Figure 2.1, or for general \(d\ge2.\) If we are to move a small distance while maintaining the constraint (i.e. staying in \(I_{h}\)), the directions we can move are those in the tangent plane at \(x.\) Thus if the tangent plane at \(x\in I_{h}\) contains some direction that has a non-zero inner product with \(\nabla f(x),\) then one can move a small amount while staying in \(I_{h}\) such that \(f\) decreases strictly. Thus such an \(x\in I_{h}\) can not be a local minimum of \(f|_{I_{h}}.\) Conversely, if \(x\in I_{h}\) is a local minimum of \(f|_{I_{h}},\) then the tangent plane can not contain a vector with a non-zero inner product with \(\nabla f(x).\) But this condition is equivalent to \(\nabla f(x)\) being a normal vector to the tangent plane. Since \(\nabla h(x)\) is also a normal vector to the tangent plane, and normal vectors are unique up to multiplicative factor, this means that \(\nabla f(x)\) and \(\nabla h(x)\) must be collinear. That is there should exist a \(\lambda\in\mathbb{R}\) (the Lagrange multiplier) such that \[\nabla f(x)=\lambda\nabla h(x).\tag{2.8}\] Thus, under “normal circumstances”, a local minimum of \(f|_{I_{h}}\) should satisfy the equation (2.8). Note that if you can strictly decrease \(f\) by moving in one direction on the constraint surface, then you can strictly increase it by moving in the opposite direction along the surface. Thus local maxima should also satisfy the condition (2.8).

Using method of Lagrange multipliers

Now that we understand why local extrema of \(f\) subject to the constraint \(h(x)=0\) should satisfy (2.8), let us discuss how to use that equation in practice. To find potential local minima and maxima we solve for \(x\) and \(\lambda\) in the system of equations \[\begin{cases} \nabla f(x)=\lambda\nabla h(x),\\ h(x)=0. \end{cases}\tag{2.9}\] In Figure 2.1, the solutions of (2.9) are precisely the green dots on the constraint curve. The following figure plots the value of \(f(x)\) along the curve \(h(x)=0\) for the example in Figures 2.3-2.1. One can observe that the green dots indeed correspond precisely to the local minima and local maxima.

0.2.3 The method of Lagrange multipliers

Using the Lagrange multiplier conditions ([eq:lagrange_conditions_eq1])-([eq:lagrange_conditions_eq2]) to solve a constrained optimization problem is an instance of the method of Lagrange multipliers.

The equation \(\nabla f(x)=\lambda\nabla h(x)\) by itself is an unconstrained equation, which makes it easier to solve. Often one first solves for \(x\) in this equation for fixed \(\lambda,\) obtaining a family of solutions \(x(\lambda)\) indexed by \(\lambda.\) One then plugs a solution \(x(\lambda)\) into the second line of (2.9), and obtains an equation \(h(x(\lambda))=0\) for \(\lambda.\) The latter is also an unconstrained equation! If we find a solution \(\lambda_{*}\) of it, then \(x_{*}=x(\lambda_{*})\) satisfies the constraint and also the equation \(\nabla f(x_{*})=\lambda_{*}\nabla g(x_{*}),\) and is thus a candidate for the global optimizer of the constrained optimization problem.

Thus we have reduced the problem finding constrained local extrema to solving the two unconstrained equations (2.9) in sequence. This technique is the constrained analogue of solving the critical point equation \(\nabla f(x)=0\) in unconstrained optimization.

Consider the optimization problem \[\text{minimize }\:\:x+2y\:\:\text{ subject to }\:\:x^{2}+\frac{1}{2}y^{2}=1.\tag{2.10}\] It has objective function \(f(x,y)=x+2y\) and constraint function \(h(x,y)=x^{2}+\frac{1}{2}y^{2}-1.\) We seek local minima on the curve \(h(x,y)=0\) by solving \[\nabla f(x,y)=\lambda\nabla h(x,y)\quad\text{for}\quad\text{arbitrary fixed }\lambda\in\mathbb{R}.\tag{2.11}\] We have \[\nabla f(x,y)=\left(\begin{matrix}1\\ 2 \end{matrix}\right)\quad\text{and}\quad\nabla h(x,y)=\left(\begin{matrix}2x\\ y \end{matrix}\right).\] Thus the equation (2.11) for this optimization problem is the system of scalar equations \[\begin{cases} 1=\lambda2x,\\ 2=\lambda y, \end{cases}\tag{2.12}\] with unique solution \[(x(\lambda),y(\lambda))=\left(\frac{1}{2\lambda},\frac{2}{\lambda}\right),\tag{2.13}\] (when \(\lambda\ne0,\) and without solution when \(\lambda=0\)). We now solve \[h(x(\lambda),y(\lambda))=0\] in \(\lambda,\) to find the potential local minima on the constraint curve. Concretely, this equation reads \[x(\lambda)^{2}+\frac{1}{2}y(\lambda)^{2}=1\quad\quad\text{i.e.}\quad\quad\frac{1}{(2\lambda)^{2}}+\frac{1}{2}\left(\frac{2}{\lambda}\right)^{2}=1.\] This equation is easy to solve by multiplying both sides by \(\lambda^{2},\) and the full set of solutions is \(\lambda=\pm\frac{3}{2}.\) Thus \[(x,y)=\pm\left(\frac{1}{3},\frac{4}{3}\right)\tag{2.14}\] are potential local minima of the objective function restricted to the curve \(h(x,y)=0.\) Plugging (2.14) into \(f\) we see that the value of the objective function at these points is \[f\left(\pm\frac{1}{3},\pm\frac{4}{3}\right)=\pm\frac{1}{3}+2\left(\pm\frac{4}{3}\right)=\pm3.\] Thus we expect \(-3\) to be the value of the optimization problem (2.10), with minimizer given by \((x,y)=\) \(-\left(\frac{1}{3},\frac{4}{3}\right).\) Similarly, we expect the global maximum of \(f(x)\) on the curve \(h(x)=0\) to be \(3,\) with maximizer at \((x,y)=\left(\frac{1}{3},\frac{4}{3}\right).\) The figure below confirms the correctness of these conclusions.

Note that the above computation does not yet constitute a rigorous proof of these conclusions about the constrained global minimum and maximum. Indeed, below we will see that the procedure used here can fail to correctly identify the global minimum/maximum. We will also study conditions under which the procedure is guaranteed to be successful, which will turn the above computation into a rigorous proof.

0.2.4 Lagrange multipliers with multiple equality constraints

The problem \[\text{maximize }\,\:(x-y)^{2}-x-y+z\,\:\text{ subject to }\,\:\begin{cases} \left(x-\frac{1}{4}\right)^{2}+2y^{2}+z^{2}=1,\\ x+y+z=1, \end{cases}\tag{2.16}\] is a optimization problem with two equality constraints.

The general problem with objective function \(f:\mathbb{R}^{d}\to\mathbb{R}\) and \(k\) constraint functions \(h_{i}:\mathbb{R}^{d}\to\mathbb{R}\) takes the form \[\text{minimize }\:f(x)\:\text{ subject to }\,h_{i}(x)=0\text{ for all }i=1,\ldots,k.\tag{2.17}\]

A more compact formulation is to let \(h:\mathbb{R}^{d}\to\mathbb{R}^{k}\) be the function \((h_{1},\ldots,h_{k}),\) so that all \(k\) scalar constraints (2.17) are combined in the vector constraint \(h(x)=0.\) Then (2.17) is written as as follows.

For \(d\ge k\ge1,\) \(D\subset\mathbb{R}^{d},\) objective function \(f:D\to\mathbb{R}\) and constraint function \({h:D\to\mathbb{R}^{k}}\) we call \[\text{minimize }\:f(x),x\in D\:\text{ subject to }h(x)=0.\tag{2.18}\] an optimization/minimization/maximization problem with \(k\) equality constraints.

Definition 2.2 is the special case \(k=1\) of Definition 2.5.

The Lagrange multiplier method generalizes to these problems, whereby the equation that local extrema satisfy is the following.

(Lagrange multiplier condition - \(k\) equality constraints). If \(d\ge1\) and \(f,g:\mathbb{R}^{d}\to\mathbb{R}\) are differentiable and \(\lambda_{1},\ldots,\lambda_{k}\in\mathbb{R}\) (the \(k\) Lagrange multipliers), then we call \[\begin{aligned} \nabla f(x) & =\sum_{i=1}^{k}\lambda_{i}\nabla h_{i}(x),\tag{}\\ h(x) & =0,\tag{} \end{aligned}\] the Lagrange multiplier conditions of the constrained optimization problem (2.18).

To see why this condition makes sense, note that a surface \(I_{h}\) defined by \(h_{1}(x)=\ldots=h_{k}(x)=0\) will usually be \(d-k\) dimensional, with tangent space of dimension \(k.\) This tangent space is perpendicular to the \(d-k\) dimensional normal space. Under appropriate conditions, the normal space at a point \(x\) is the subspace of \(\mathbb{R}^{d}\) spanned by \(\nabla h_{1}(x),\ldots,\nabla h_{k}(x).\) Thus ([eq:ch3_multiple_equality_constraints_lagrange_equation]) is an expression of the fact that for a local extremum \(x\) of \(f|_{I_{h}}\) the fastest descent direction \(-\nabla f\) should be perpendicular to the tangent space, i.e. it should lie in the normal space. This naturally generalizes Definition 2.3 from the case of \(k=1\)-dimensional normal space and \(d-1\) dimensional tangent space to the case of \(k\)-dimensional normal space and \(d-k\) dimensional tangent space.

Consider the optimization problem \[\text{maximize }\:\:c\:\:\text{ subject to }\,\,\begin{cases} \frac{1}{2}(a^{2}+b^{2}+c^{2})=1,\text{ and}\\ a+b+c=\frac{1}{2}. \end{cases}\tag{2.19}\] The objective function is \(f(a,b,c)=c\) with \[\nabla f(a,b,c)=\left(\begin{matrix}0\\ 0\\ 1 \end{matrix}\right)\] and the constraint functions are \(h_{1}(a,b,c)=\frac{1}{2}(a^{2}+b^{2}+c^{2})-1\) and \(h_{2}(a,b,c)=a+b+c-\frac{1}{2}\) with \[\nabla h_{1}(a,b,c)=\left(\begin{matrix}a\\ b\\ c \end{matrix}\right)\quad\text{and}\quad\nabla h_{2}(a,b,c)=\left(\begin{matrix}1\\ 1\\ 1 \end{matrix}\right).\] In this problem the Lagrange multiplier equation ([eq:ch3_multiple_equality_constraints_lagrange_equation]) is thus the system \[\left(\begin{matrix}0\\ 0\\ 1 \end{matrix}\right)=\lambda_{1}\left(\begin{matrix}a\\ b\\ c \end{matrix}\right)+\lambda_{2}\left(\begin{matrix}1\\ 1\\ 1 \end{matrix}\right)\] of three scalar equations. For \(\lambda_{1}=\lambda_{2}=0\) there are no solutions \((a,b,c)\) to these equations. Likewise, for \(\lambda_{1}=0,\lambda_{2}\ne0\) there are no solutions. If \(\lambda_{1}\ne0\) the unique solution is \[x(\lambda_{1},\lambda_{2}):=\left(\begin{matrix}a(\lambda_{1},\lambda_{2})\\ b(\lambda_{1},\lambda_{2})\\ c(\lambda_{1},\lambda_{2}) \end{matrix}\right):=\left(\begin{matrix}-\frac{\lambda_{2}}{\lambda_{1}}\\ -\frac{\lambda_{2}}{\lambda_{1}}\\ \frac{1-\lambda_{2}}{\lambda_{1}} \end{matrix}\right).\] For any \(\lambda_{1}\ne0,\lambda_{2}\in\mathbb{R},\) it holds that \(\nabla f(x(\lambda_{1},\lambda_{2}))=\lambda_{1}\nabla h_{1}(x(\lambda_{1},\lambda_{2}))+\lambda_{2}\nabla h_{2}(x(\lambda_{1},\lambda_{2})).\) Next we solve the equations \(h_{1}(x(\lambda_{1},\lambda_{2}))=h_{2}(x(\lambda_{1},\lambda_{2}))=0\) in \(\lambda_{1},\lambda_{2}.\) Using the form \(h_{1},h_{2}\) and \(x(\lambda_{1},\lambda_{2})\) we see that these equations reduce to \[\begin{cases} \frac{\lambda_{2}^{2}}{\lambda_{1}^{2}}+\frac{1}{2}\frac{(1-\lambda_{2})^{2}}{\lambda_{1}^{2}}=1,\\ -2\frac{\lambda_{2}}{\lambda_{1}}+\frac{1-\lambda_{2}}{\lambda_{1}}=\frac{1}{\sqrt{2}}. \end{cases}\] The second equation implies \(\sqrt{2}(1-3\lambda_{2})=\lambda_{1},\) which with the fist equation gives \[\lambda_{2}^{2}+\frac{1}{2}(1-\lambda_{2})^{2}=2(1-3\lambda_{2})^{2}.\] This equation has solutions \[\lambda_{2}=\frac{1\pm\sqrt{\frac{2}{11}}}{3}.\] Plugging these into \(\sqrt{2}(1-3\lambda_{2})=\lambda_{1}\) and solving for \(\lambda_{1}\) yields \[\lambda_{1}=\mp\frac{2}{\sqrt{11}}.\] We thus have found the (candidates for) local extrema \[(a,b,c)=\left(\frac{\sqrt{11}+\sqrt{2}}{6},\frac{\sqrt{11}+\sqrt{2}}{6},-\frac{\sqrt{11}}{3}+\frac{\sqrt{2}}{6}\right)\] and \[(a,b,c)=\left(-\frac{\sqrt{11}-\sqrt{2}}{6},-\frac{\sqrt{11}-\sqrt{2}}{6},\frac{\sqrt{11}}{3}+\frac{\sqrt{2}}{6}\right).\] Plugging these into \(f(a,b,c)\) we obtain the corresponding values of the objective function as \[-\frac{\sqrt{11}}{3}+\frac{\sqrt{2}}{6}\quad\text{and}\quad\frac{\sqrt{11}}{3}+\frac{\sqrt{2}}{6}.\] Since the latter is larger we expect it to be the value of (2.19) (the former should be the result of minimizing \(c\) subject to the same constraints).

As for Example 2.4, this computation does not yet constitute a rigorous proof that the result is indeed the global minimum of the optimization problem.

0.2.5 Lagrange multiplier theorem

We will now formulate a rigorous theorem that provides conditions on \(f\) and \(h\) which guarantee that all local extrema \(x\) of \(f|_{I_{h}}\) are solutions of the Lagrange equation ([eq:ch3_multiple_equality_constraints_lagrange_equation]), for some values of the Lagrange multipliers \(\lambda_{1},\ldots,\lambda_{k}.\) Beyond differentiability of \(f,h,\) the condition we need is that the gradients \(\nabla h_{1}(x),\ldots,\nabla h_{k}(x)\) of each of the \(k\) constraint functions are linearly independent for all \(x\in I_{h}.\)

Let \(d\ge k\ge1\) and \(D\subset\mathbb{R}^{d}\) be open, and let \(f:D\to\mathbb{R}\) and \(h_{i}:D\to\mathbb{R},i=1,\ldots,k,\) be continuously differentiable. Let \(I_{h}=\{x\in D:h_{1}(x)=\ldots=h_{k}(x)=0\}.\) If \(x\in I_{h}\) is a local extremum of \(f|_{I_{h}}\) and \(\nabla h_{1}(x),\ldots,\nabla h_{k}(x)\in\mathbb{R}^{d}\) are linearly independent, then there exists a vector \(\lambda\in\mathbb{R}^{d}\) such that \[\nabla f(x)=\sum_{i=1}^{d}\lambda_{i}\nabla h_{i}(x).\tag{2.21}\]

2.9.

If the condition of theorem is satisfied, the method of Lagrange multipliers is guaranteed to find all constrained local extrema (and thus also the constrained global extrema).

When \(k=1\) the condition that “\(\nabla h_{1}(x)\) is linearly independent” is just an unusual way to express the condition \({\nabla h_{1}(x)\ne0}.\)

The proof of Theorem 2.20 is non-examinable. If you are interested it is given in the appendix.

In Example 2.4, the single constraint function \(h(x,y)=x^{2}+\frac{1}{2}y^{2}-1\) has a non-zero gradient everywhere except at \(x=y=0.\) Since this point does not lie in the constraint set, the conditions of Theorem 2.4 are satisfied. Thus we can be certain that in finding all solutions of the equation 2.11 we have examined all local minimizers. Since the constraint set is compact, one of these must be a global minimizer. Thus with Theorem 2.4 the argument of Example 2.4 turns into a rigorous proof.

In Example 2.7 the two constraint functions \(h_{1}(a,b)=\frac{1}{2}(a^{2}+b^{2}+c^{2})-1\) and \(h_{2}(a,b)=a+b+c-\frac{1}{2}\) have gradients \(\nabla h_{1}(a,b)=(a,b,c)\) and \(\nabla h_{2}(a,b)=(1,1,1),\) which are linearly independent except if \(a=b=c.\) No such point satisfies both \(h_{1}(a,b,c)=0\) and \(h_{2}(a,b,c)=0,\) so the constraint set \(I_{h}\) contains no such point. Again since \(I_{h}\) set is closed, using Theorem 2.4 gives us absolute certainty that we indeed found the global minimizer in Example 2.4.

We now give an example where the condition of Theorem 2.20 is not satisfied, and where the method of Lagrange multipliers fails to find the global minimum.

Consider the optimization problem \[\text{minimize }\:y\text{ subject to }\frac{1}{3}(y-1)^{3}-\frac{1}{2}x^{2}=0,\] with objective function \(f(x,y)=y\) and single constraint function \(h(x,y)=\frac{1}{3}(y-1)^{3}-\frac{1}{2}x^{2}.\) We have \[\nabla f(x,y)=\left(\begin{matrix}0\\ 1 \end{matrix}\right)\quad\text{and}\quad\nabla h(x,y)=\left(\begin{matrix}x\\ (y-1)^{2} \end{matrix}\right).\] The Lagrange multiplier equation (2.21) for this problem is thus \[\left(\begin{matrix}0\\ 1 \end{matrix}\right)=\lambda\left(\begin{matrix}x\\ (y-1)^{2} \end{matrix}\right).\] There is no solution for \(\lambda=0.\) If \(\lambda\ne0\) then the unique solutions are \[x(\lambda)=0\quad\quad y(\lambda)=1\pm\frac{1}{\sqrt{\lambda}}.\] For these the constraint \(\frac{1}{3}(y(\lambda)-1)^{3}-\frac{1}{2}x(\lambda)^{2}=0\) simplifies to \(\lambda^{-1}=0,\) so there is no \(\lambda\) such that \((x(\lambda),y(\lambda)\)) satisfies the constraint. Thus the method of Lagrange multipliers has failed to identify any local extremum for the problem.

The following plot of the constraint curve \(h(x,y)=0\) makes it evident that \((x,y)=(0,1)\) is the global minimum of \(f|_{I_{h}}.\)

Indeed, a point \((x,y)\) on the curve always has \(y\ge1,\) with equality only if \((x,y)=(0,1).\) Since \(f(x,y)=y\) this implies that \(z_{*}=(0,1)\) is the global minimum. It was not found by the method of Lagrange multipliers because \(\nabla f(z_{*})=(0,1)\ne0\) while \(\nabla h(z_{*})=0.\) Thus there is obviously no Lagrange multiplier \(\lambda\) such that \(\nabla f(z_{*})=\lambda\nabla h(z_{*}).\)

While in this example \(f\) and \(h\) are both continuously differentiable, the fact that \(\nabla h(z_{*})=0\) for the point \(z_{*}\) on the constraint curve means that the condition of Theorem 2.20 is not satisfied. Thus Theorem 2.20 does not contradict the failure of Lagrange multipliers in this case.

Alternatively, note the singular shape of the curve around \(z_{*},\) which is that of \(y=1+c|x|^{2/3}\) for appropriate \(c>0.\) Because of this shape, there are many distinct tangent lines to the curve at this point. This non-uniqueness invalidates the heuristic derivation in Section [sec:ch2_lagrange_mult]. This kind of singular shape is only possible at points on the curve s.t. \(\nabla h(x)=0.\)

0.2.6 Lagrangian function and dual optimization problem

Another perspective on Lagrange multipliers involves an auxiliary function called the Lagrangian function and a corresponding dual optimization problem. Consider the general minimization with equality constraints of the form (2.18). The result of this minimization problem can also be written \[\inf_{x\in D:h(x)=0}f(x).\tag{2.23}\]

For any constrained optimization with equality constraints as in Definition 2.5, the Lagrangian function (or just Lagrangian) is the function \[L:D\times\mathbb{R}^{k}\to\mathbb{R},\qquad L(x,\lambda)=f(x)+\lambda^{T}h(x).\tag{2.24}\]

If \(f\)and \(h\) are differentiable, then so is \(L\) and \[\nabla_{x}\{L(x,\lambda)\}=\nabla f(x)+\lambda^{T}\nabla h(x),\quad\text{and}\quad\nabla_{\lambda}\{L(x,\lambda)\}=h(x).\tag{2.25}\] For a critical point \((x_{*},\lambda_{*})\) of \(L\) both gradients in (2.25) vanish, so then \(x_{*}\) satisfies the constraint \(h(x_{*})=0,\) and \(x_{*},\lambda_{*}\) satisfy the Lagrange equation ([eq:ch3_multiple_equality_constraints_lagrange_equation]) of the previous sections (after flipping the sign of \(\lambda\))! Conversely, under the conditions of Theorem 2.20, for any local optimizer \(x_{*}\) there exists a \(\lambda_{*}\) s.t. \((x_{*},\lambda_{*})\) is a critical point of \(L.\) However, the Lagrangian \(L(x,\lambda)\) is well-defined also if \(f,h\) are not differentiable.

For any Lagrangian as in Definition 2.12, the dual function of the Lagrangian is the function \[q:\mathbb{R}^{k}\to\mathbb{R},\qquad q(\lambda)=\inf_{x\in D}L(x,\lambda).\]

Note that the \(\inf\) in the dual function is not subject to the constraint \(h(x)=0.\)

For any dual function as in Definition 2.13, the optimization problem \[\sup_{\lambda\in\mathbb{R}^{k}}q(\lambda)\] is called the dual optimization problem. The original optimization problem (2.23) is called the primal problem.

The dual optimization problem is also an unconstrained optimization.

The Lagrangian and dual function provide a variational lower bound for the infimum in (2.23), as the following lemma proves.

For any \(d\ge1,k\ge1,\) \(D\subset\mathbb{R}^{d}\) and \(f:D\to\mathbb{R},\) \(h:D\to\mathbb{R}^{k}\) it holds that \[\sup_{\lambda\in\mathbb{R}^{k}}q(\lambda)=\sup_{\lambda\in\mathbb{R}^{k}}\inf_{x\in D}L(x,\lambda)\le\inf_{x\in D:h(x)=0}f(x).\tag{2.27}\]

Proof. If \(h(x)=0\) then \(L(x,\lambda)=f(x)\) for all \(\lambda\in\mathbb{R}^{k}.\) Thus for all \(\lambda\) \[\inf_{x\in D:h(x)=0}L(x,\lambda)=\inf_{x\in D:h(x)=0}f(x).\] This implies that for all \(\lambda\) \[\inf_{x\in D}L(x,\lambda)\le\inf_{x\in D:h(x)=0}f(x).\] Maximizing both sides over \(\lambda\) proves (2.27).

Note that the dual problem on the r.h.s. of (2.27) is a double optimization, but each of them is unconstrained. We have thus removed the constraint, at the cost of adding an optimization over \(\lambda.\) In favorable situations the inequality in (2.27) is in fact an equality, and the constrained minimum in (2.23) can be computed by solving the dual problem. Several examples of this are given below.

For a maximization problem, the primal problem is of the form \[\sup_{x\in D:h(x)=0}f(x).\] Its dual function is \[q(\lambda)=\sup_{x\in D}L(x,\lambda),\] and the dual optimization problem is \[\inf_{\lambda\in\mathbb{R}^{k}}q(\lambda)=\inf_{\lambda\in\mathbb{R}^{k}}\sup_{x\in D}L(x,\lambda).\] The variational bound is now an upper bound for the optimal value of the primal problem, namely \[\sup_{x\in D:h(x)=0}f(x)\le\inf_{\lambda\in\mathbb{R}^{k}}q(\lambda)=\inf_{\lambda\in\mathbb{R}^{k}}\sup_{x\in D}L(x,\lambda).\tag{2.28}\]

We revisit Example 2.4, and this time approach it by considering the Lagrangian function and its dual. We write the optimization problem (2.10) equivalently as \[\inf_{x,y\in\mathbb{R}:x^{2}+\frac{1}{2}y^{2}=1}\{x+2y\}.\tag{2.29}\] According to (2.24) the Lagrangian for this problem is \[L((x,y),\lambda)=x+2y+\lambda\left(x^{2}+\frac{1}{2}y^{2}-1\right),\tag{2.30}\] and the dual function is \[q(\lambda)=\inf_{x,y\in\mathbb{R}}L((x,y),\lambda).\] By Lemma 2.26 we know that \[\sup_{\lambda\in\mathbb{R}}q(\lambda)=\sup_{\lambda\in\mathbb{R}}\inf_{x,y\in\mathbb{R}}L((x,y),\lambda)\le\inf_{x,y\in\mathbb{R}:x^{2}+\frac{1}{2}y^{2}=1}\{x+2y\}.\] For any fixed \(\lambda,\) the expression (2.30) is a quadratic in \(x,y.\) If \(\lambda<0,\) then the highest order terms in (2.30) tend to \(-\infty\) e.g. if \(x,y\to\infty,\) which means that \[\inf_{x,y\in\mathbb{R}}L((x,y),\lambda)=-\infty\:\:\text{ if }\:\:\lambda<0.\] If \(\lambda=0\) the same holds since \(x+2y\to-\infty\) if \(y=0,x\to-\infty.\) If \(\lambda>0,\) then the quadratic \((x,y)\to L((x,y),\lambda)\) is convex. Therefore we are guaranteed to find a global minimizer by solving the critical point equation \(\nabla_{z}L(z,\lambda)=0\) (where \(z=(x,y)\)). Since \(\partial_{x}L((x,y),\lambda)=1+2x\lambda\) and \(\partial_{y}L((x,y),\lambda)=2+y\lambda\) the critical point equation is the system \[\begin{cases} 1+2x\lambda=0,\\ 2+2y\lambda=0. \end{cases}\tag{2.31}\] This is the system (2.12) we already solved in Example 2.4, up to the change of sign. It is easy to see that for \(\lambda=0\) there is no solution, and for \(\lambda=0\) the unique solution is \[(x(\lambda),y(\lambda))=\left(-\frac{1}{2\lambda},-\frac{2}{\lambda}\right),\tag{2.32}\] (cf. (2.13)). Plugging this solution into \(L\) we obtain \[\begin{array}{ccl} L(x(\lambda),y(\lambda),\lambda) & = & -\frac{1}{2\lambda}+2\left(-\frac{2}{\lambda}\right)+\lambda\left(\left(-\frac{1}{2\lambda}\right)^{2}+\frac{1}{2}\left(-\frac{2}{\lambda}\right)^{2}-1\right)\\ & = & -\frac{9}{4\lambda}-\lambda. \end{array}\] Thus the dual function is given by \[q(\lambda)=\inf_{x,y\in\mathbb{R}}L((x,y),\lambda)=\begin{cases} -\infty & \text{if }\lambda\le0,\\ -\frac{9}{4\lambda}-\lambda & \text{\,if }\lambda>0. \end{cases}\] The dual problem is then \[\sup_{\lambda\in\mathbb{R}}q(\lambda)=\sup_{\lambda>0}\left\{ -\frac{9}{4\lambda}-\lambda\right\} .\] The function \(\lambda\to-\frac{9}{4\lambda}-\lambda\) has critical point equation \(\frac{9}{4\lambda^{2}}-1=0,\) which clearly has a unique positive solution \(\lambda_{*}=\frac{3}{2}.\) Since \(-\frac{9}{4\lambda}-\lambda\to-\infty\) for \(\lambda\downarrow0\) and \(\lambda\uparrow\infty,\) this must be the global maximizer (alternatively, one can easily check that \(\lambda\to-\frac{9}{4\lambda}-\lambda\) is concave by computing the second derivative, so a critical point is always a global maximizer). Thus \[\sup_{\lambda\in\mathbb{R}}q(\lambda)=-\frac{9}{4\lambda_{*}}-\lambda_{*}=-\frac{9}{4\cdot\frac{3}{2}}-\frac{3}{2}=-3.\tag{2.33}\] Note that this is precisely the value we argued was the global minimum in Example 2.4. In Example 2.10 we saw how Theorem 2.20 can be used conclude rigorously that this is indeed the global minimum.

In the alternative Lagrange dual approach, we obtain thanks to Lemma 2.26 the rigorous lower bound \[-3\le\inf_{x,y\in\mathbb{R}:x^{2}+\frac{1}{2}y^{2}=1}\{x+2y\}.\tag{2.34}\] In Example 2.4 we explicitly solved for \((x,y)\) on the curve \(x^{2}+\frac{1}{2}y^{2}=1,\) and successfully found the point \((x_{*},y_{*})=\left(-\frac{1}{3},-\frac{4}{3}\right)\) for which \(x_{*}+2y_{*}=-3.\) Using this knowledge we trivially obtain \[\inf_{x,y\in\mathbb{R}:x^{2}+\frac{1}{2}y^{2}=1}\{x+2y\}\le x_{*}+2y_{*}=-3.\tag{2.35}\] Since (2.34) and (2.35) prove that \(-3\) is both an upper and a lower bound of the \(\inf,\) we have obtained a rigorous proof that \[\inf_{x,y\in\mathbb{R}:x^{2}+\frac{1}{2}y^{2}=1}\{x+2y\}=-3.\] The solution \((x_{*},y_{*})\) computed in Example 2.4 can also be obtained directly from computation here, as follows. Take the optimizer \(\lambda=\lambda_{*}=\frac{3}{2}\) for \(\lambda\) and plug it into (2.32), giving \[(x(\lambda_{*}),y(\lambda_{*}))=\left(-\frac{1}{2\lambda_{*}},-\frac{2}{\lambda_{*}}\right)\overset{\lambda_{*}=\frac{3}{2}}{=}\left(-\frac{1}{3},-\frac{4}{3}\right).\] We can verify that \(h(x(\lambda_{*}),y(\lambda_{*}))=0\) by simply explicitly computing \[x(\lambda_{*})^{2}+\frac{1}{2}y(\lambda_{*})^{2}-1=\left(-\frac{1}{3}\right)^{2}+\frac{1}{2}\left(-\frac{4}{3}\right)^{2}-1=\frac{1}{9}+\frac{8}{9}-1=0.\] Then \[\inf_{x,y\in\mathbb{R}:x^{2}+\frac{1}{2}y^{2}=1}\{x+2y\}\le x(\lambda_{*})+2y(\lambda_{*})=-\frac{1}{3}-2\frac{4}{3}=-3,\] reproducing (2.35) without relying on the computation in Example 2.4.

Unlike in Example 2.4, here we did not explicitly solve for \(x_{*},y_{*}\) that satisfy the constraint, but somewhat “magically” the maximizer \(\lambda_{*}\) “selected” a point \(x(\lambda_{*}),y(\lambda_{*})\) satisfying the constraint. At the end of the section we will prove that under certain conditions this convenient outcome is guaranteed.

Consider the optimization problem \[\text{minimize }\:\:x^{4}-x^{2}+y^{2}\:\:\text{ subject to }\:\:x^{2}+xy=\frac{1}{16},\tag{2.37}\] or equivalently \[\inf_{x,y\in\mathbb{R}:x^{2}+xy=\frac{1}{16}}\left\{ x^{4}-x^{2}+y^{2}\right\} .\] The Lagrangian of the problem is \[L((x,y),\lambda)=x^{4}-x^{2}+y^{2}+\lambda\left(x^{2}+xy-\frac{1}{16}\right).\] We compute \[\sup_{\lambda\in\mathbb{R}}\inf_{x,y\in\mathbb{R}}L((x,y),\lambda).\] Consider first the dual function \[q(\lambda)=\inf_{x,y\in\mathbb{R}}L((x,y),\lambda).\] We have \[\begin{array}{ccl} {\displaystyle q(\lambda)} & = & {\displaystyle \inf_{x,y\in\mathbb{R}}}\left\{ x^{4}-x^{2}+y^{2}+\lambda(x^{2}+xy-\frac{1}{16})\right\} \\ & = & {\displaystyle \inf_{x\in\mathbb{R}}}\left\{ x^{4}-x^{2}+\lambda x^{2}+{\displaystyle \inf_{y\in\mathbb{R}}}\left(y^{2}+\lambda xy\right)\right\} -\frac{\lambda}{16} \end{array}\] The function \(y\to y^{2}+\lambda xy\) is quadratic and uniquely minimized by \[y=-\frac{\lambda x}{2}\tag{2.38}\] so \[{\displaystyle \inf_{y\in\mathbb{R}}}\left(y^{2}+\lambda xy\right)=\frac{\lambda^{2}x^{2}}{4}-\frac{\lambda^{2}x^{2}}{2}=-\frac{\lambda^{2}x^{2}}{4}.\] Thus \[\begin{array}{cl} {\displaystyle q(\lambda)} & =\inf_{x\in\mathbb{R}}\left\{ x^{4}-x^{2}+\lambda x^{2}-\frac{\lambda^{2}x^{2}}{4}\right\} -\frac{\lambda}{16}\\ & =\inf_{x\in\mathbb{R}}\left\{ x^{4}+x^{2}\left\{ -1+\lambda-\frac{\lambda^{2}}{4}\right\} \right\} -\frac{\lambda}{16}\\ & =\inf_{x\in\mathbb{R}}\left\{ x^{4}-x^{2}\left(\frac{\lambda}{2}-1\right)^{2}\right\} -\frac{\lambda}{16}. \end{array}\] Using the change of variables \(a=x^{2}\) the \(\inf\) over \(x\) turns into the quadratic minimization \[\inf_{a\ge0}\left\{ a^{2}-a\left(\frac{\lambda}{2}-1\right)^{2}\right\} \overset{a=\frac{1}{4}\left(\frac{\lambda}{2}-1\right)}{=}-\frac{1}{4}\left(\frac{\lambda}{2}-1\right)^{4}.\tag{2.39}\] Thus \[{\displaystyle q(\lambda)}=-\frac{1}{4}\left(\frac{\lambda}{2}-1\right)^{4}-\frac{\lambda}{16}.\] It remains to solve the dual problem \[\sup_{\lambda\in\mathbb{R}}{\displaystyle q(\lambda)}=\sup_{\lambda\in\mathbb{R}}\left\{ -\frac{1}{4}\left(\frac{\lambda}{2}-1\right)^{4}-\frac{\lambda}{16}\right\} .\] This function of \(\lambda\) clearly tends to \(-\infty\) as \(\lambda\to\pm-\infty,\) so its maximum must be a critical point, i.e. a solution of \[-\frac{1}{2}\left(\frac{\lambda}{2}-1\right)^{3}-\frac{1}{16}=0.\] It is easy to show that this equation has the unique solution \(\lambda=1.\) Thus \[\sup_{\lambda\in\mathbb{R}}\inf_{x,y\in\mathbb{R}}L((x,y),\lambda)=-\frac{1}{4}\left(\frac{1}{2}-1\right)^{4}-\frac{1}{16}=-\frac{5}{64}.\] Thanks to Lemma 2.26 we now have the lower bond \[-\frac{5}{64}\le\inf_{x,y\in\mathbb{R}:x^{2}+xy=\frac{1}{16}}\left\{ x^{4}-x^{2}+y^{2}\right\} .\tag{2.40}\] Let us try to match this with an upper bound. Plugging the solution \(\lambda=1\) into the minimizers of \(a,x,y\) from (2.38) and (2.39) above, we obtain \(a_{*}=\frac{1}{4}\left(\frac{1}{2}\right)^{2}=\frac{1}{8},\) \(x_{*}=\sqrt{a}=\frac{1}{2\sqrt{2}}\) and \(y_{*}=-\frac{1\cdot\frac{1}{2\sqrt{2}}}{2}=-\frac{1}{4\sqrt{2}}.\) We can directly verify that \[x_{*}^{2}+x_{*}y_{*}=\left(\frac{1}{2\sqrt{2}}\right)^{2}+\frac{1}{2\sqrt{2}}\left(-\frac{1}{4\sqrt{2}}\right)=\frac{1}{8}-\frac{1}{16}=\frac{1}{16},\] so \((x_{*},y_{*})\) satisfy the constraint. Thus \[\inf_{x,y\in\mathbb{R}:x^{2}+xy=\frac{1}{16}}\left\{ x^{4}-x^{2}+y^{2}\right\} \le x_{*}^{4}-x_{*}^{2}+y_{*}^{2}.\] Furthermore \[x_{*}^{4}-x_{*}^{2}+y_{*}^{2}=\frac{1}{64}-\frac{1}{8}+\frac{1}{32}=-\frac{5}{64}.\] This indeed matches the lower bound (2.40), so we have proved that \[\inf_{x,y\in\mathbb{R}:x^{2}+xy=\frac{1}{16}}\left\{ x^{4}-x^{2}+y^{2}\right\} =-\frac{5}{64}.\]